Platform Strategy and Its Discontents

The web is losing. Badly. But a comeback is possible.

This post is an edited and expanded version of a now-mangled Mastodon thread.

Some in the JavaScript community imagine that I harbour an irrational dislike of their tools when, in fact, I want nothing more than to stop thinking about them. Live-and-let-live is excellent guidance, and if it weren't for React et al.'s predictably ruinous outcomes, the public side of my work wouldn't involve educating about the problems JS-first development has caused.

But that's not what strategy demands, and strategy is my job.[1]

I've been holding my fire (and the confidences of consulting counterparties) for most of the last decade. Until this year, I only occasionally posted traces documenting the worsening rot. I fear this has only served to make things look better than they are.

Over the past decade, my work helping teams deliver competitive PWAs gave me a front-row seat to a disturbing trend. The rate of failure to deliver usable experiences on phones was increasing over time, despite the eye-watering cost of JS-based stacks teams were reaching for. Worse and costlier is a bad combo, and the opposite of what competing ecosystems did.

Native developers reset hard when moving from desktop to mobile, getting deeply in touch with the new constraints. Sure, developing a codebase multiple times is more expensive than the web's write-once-test-everywhere approach, but at least you got speed for the extra cost.

That's not what web developers did. Contemporary frontend practice pretended that legacy-oriented, desktop-focused tools would perform fine in this new context, without ever checking if they did. When that didn't work, the toxic-positivity crowd blamed the messenger.[2]

Frontend's tragically timed turn towards JavaScript means the damage isn't limited to the public sector or "bad" developers. Some of the strongest engineers I know find themselves mired in the same quicksand. Today's popular JS-based approaches are simply unsafe at any speed. The rot is now ecosystem-wide, and JS-first culture owns a share of the responsibility.

But why do I care?

Platforms Are Competitions

I want the web to win.

What does that mean? Concretely, folks should be able to accomplish most of their daily tasks on the web. But capability isn't sufficient; for the web to win in practice, users need to turn to the browser for those tasks because it's easier, faster, and more secure.

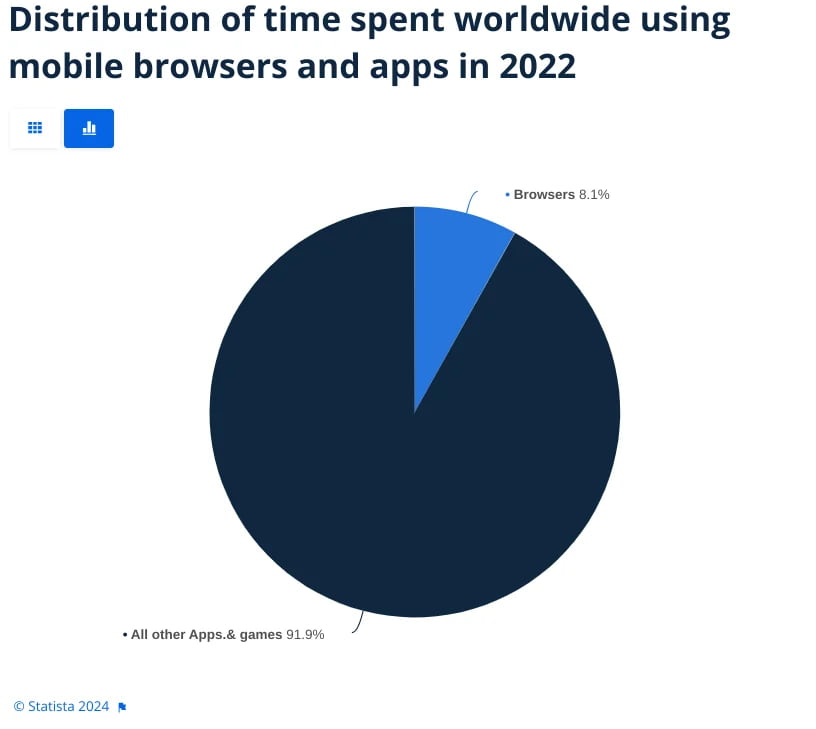

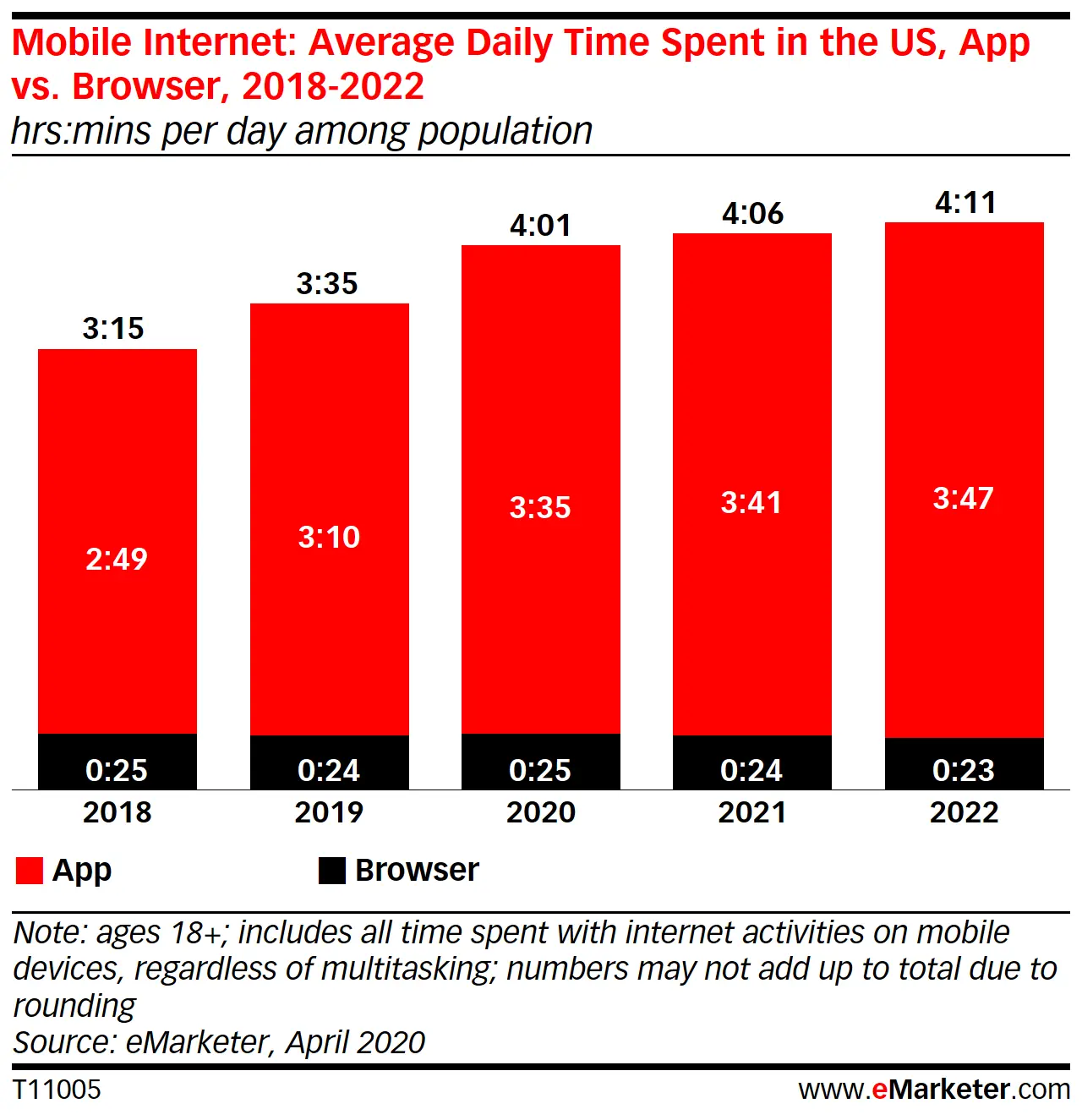

A reasonable metric of success is time spent as a percentage of time on device.[3]

But why should we prefer one platform over another when, in theory, they can deliver equivalently good experiences?

As I see it, the web is the only generational software platform that has a reasonable shot at delivering a potent set of benefits to users:

- Fresh

- Frictionless

- Safe by default

- Portable and interoperable

- Gatekeeper-free (no prior restraint on publication)[4]

- Standards-based, and therefore...

- User-mediated (extensions, browser settings, etc.)

- Open Source compatible

No other successful platform provides all of these, and others that could are too small to matter.

Platforms like Android and Flutter deliver subsets of these properties but capitulate to capture by the host OS agenda, allowing their developers to be taxed through app stores and proprietary API lock-in. Most treat user mediation like a bug to be fixed.

The web's inherent properties have created an ecosystem that is unique in the history of software, both in scope and resilience.

...and We're Losing

So why does this result in intermittent antagonism towards today's JS community?

Because the web is losing, and instead of recognising that we're all in it together, then pitching in to right the ship, the Lemon Vendors have decided that predatory delay and "I've got mine, Jack"-ism is the best response.

What do I mean by "losing"?

Going back to the time spent metric, the web is cleaning up on the desktop. The web's JTBD percentage and fraction of time spent both continue to rise as we add new capabilities to the platform, displacing other ways of writing and delivering software, one fraction of a percent every year.[5]

The web is desktop's indispensable ecosystem. Who, a decade ago, thought the web would be such a threat to Adobe's native app business that it would need to respond with a $20BN acquisition attempt and a full-fledged version of Photoshop (real Photoshop) on the web?

Model advantages grind slowly but finely. They create space for new competitors to introduce the intrinsic advantages of their platform in previously stable categories. But only when specific criteria are met.

Win Condition

First and foremost, challengers need a cost-competitive channel. That is, users have to be able to acquire software that runs on this new platform without a lot of extra work. The web drops channel costs to nearly zero, assuming...

80/20 capability. Essential use-cases in the domain have to be (reliably) possible for the vast majority (90+%) of the TAM. Some nice-to-haves might not be there, but the model advantage makes up for it. Lastly...

It has to feel good. Performance can't suck for core tasks.[6] It's fine for UI consistency with native apps to wander a bit.[7] It's even fine for there to be a large peak performance delta. But the gap can't be such a gulf that it generally changes the interaction class of common tasks.

So if the web is meeting all these requirements on desktop – even running away with the lead – why am I saying "the web is losing"?

Because more than 75% of new devices that can run full browsers are phones. And the web is getting destroyed on mobile.

Utterly routed.

This is what I started warning about in 2019, and more recently on this blog. The terrifying data I had access to five years ago is now visible from space.

If that graph looks rough-but-survivable, understand that it's only this high in the US (and other Western markets) because the web was already a success in those geographies when mobile exploded.

That history isn't shared in the most vibrant growth markets, meaning the web has fallen from "minuscule" to "nonexistent" as a part of mobile-first daily life globally.

This is the landscape. The web is extremely likely to get cast in amber and will, in less than a technology generation, become a weird legacy curio.

What happens then? The market for web developers will stop expanding, and the safe, open, interoperable, gatekeeper-free future for computing will be entirely foreclosed — or at least the difficulty will go from "slow build" to "cold-start problem"; several orders of magnitude harder (and therefore unlikely).

This failure has many causes, but they're all tractable. This is why I have worked so hard to close the capability gaps with Service Workers, PWAs, Notifications, Project Fugu, and structural solutions to the governance problems that held back progress. All of these projects have been motivated by the logic of platform competition, and the urgency that comes from understanding that the web doesn't have a natural constituency.[8]

If you've read any of my writing over this time, it will be unsurprising that this is why I eventually had to break silence and call out what Apple has done on iOS, and what Facebook and Android have done to more quietly undermine browser choice.

These gatekeepers are kneecapping the web in different, but overlapping and reinforcing ways. There's much more to say here, but I've tried to lay out the landscape over the past few years. But even if we break open the necessary 80/20 capabilities and restart engine competition, today's web is unlikely to succeed on mobile.

You Do It To Yourself, And That's What Really Hurts

Web developers and browsers have capped the web's mobile potential by ensuring it will feel terrible on the phones most folks have. A web that can win is a web that doesn't feel like sludge. And today it does.

This failure has many fathers. Browsers have not done nearly enough to intercede on users' behalf; hell, we don't even warn users that links they tap on might take them to sites that lock up the main thread for seconds at a time!

Things have gotten so bad that even the extremely weak pushback on developer excess that Google's Core Web Vitals effort provides is a slow-motion earthquake. INP, in particular, is forcing even the worst JS-first lemon vendors to retreat to the server — a tacit acknowledgement that their shit stinks.

So this is the strategic logic of why web performance matters in 2024; for the web to survive, it must start to grow on mobile. For that growth to start, we need the web to be a credible way to deliver these sorts of 80/20 capability-enabled mobile experiences with not-trash performance. That depends both on browsers that don't suck (we see you, Apple) and websites that don't consistently lock up phones and drain batteries.

Toolchains and communities that retreat into the numbing comfort of desktop success are a threat to that potential.

There's (much) more for browsers to do here, but developers that want the web to succeed can start without us. Responsible, web-ecology-friendly development is more than possible today, and the great news is that it tends to make companies more money, too!

The JS-industrial-complex culture that pooh-poohs responsibility is self-limiting and a harm to our collective potential.

Groundhog Day

Nobody has ever hired me to work on performance.

It's (still) on my plate because terrible performance is a limiting factor on the web's potential to heal and grow. Spending nearly half my time diagnosing and remediating easily preventable failure is not fun. The teams I sit with are not having fun either, and goodness knows there are APIs I'd much rather be working on instead.

My work today, and for the past 8 years, has only included performance because until it's fixed the whole web is at risk.

It's actually that dire, and the research I publish indicates that we are not on track to cap our JS emissions or mitigate them with CPUs fast enough to prevent ecosystem collapse.

Contra the framework apologists, pervasive, preventable failure to deliver usable mobile experiences is often because we're dragging around IE 9 compat and long toolchains premised on outdated priors like a ball and chain.[9]

Reboot

Things look bad, and I'd be remiss if I didn't acknowledge that it could just be too late. Apple and Google and Facebook, with the help of a pliant and credulous JavaScript community, might have succeeded where 90s-era Microsoft failed — we just don't know it yet.

But it seems equally likely that the web's advantages are just dormant. When browser competition is finally unlocked, and when web pages aren't bloated with half a megabyte of JavaScript (on average), we can expect a revolution. But we need to prepare for that day and do everything we can to make it possible.

Failure and collapse aren't pre-ordained. We can do better. We can grow the web again. But to do that, the frontend community has to decide that user experience, the web's health, and their own career prospects are more important than whatever JS-based dogma VC-backed SaaS vendors are shilling this month.

My business card says "Product Manager" which is an uncomfortable fudge in the same way "Software Engineer" was an odd fit in my dozen+ years on the Chrome team.

My job on both teams has been somewhat closer to "Platform Strategist for the Web". But nobody hires platform strategists, and when they do, it's to support proprietary platforms. The tactics, habits of mind, and ways of thinking about platform competition for open vs. closed platforms could not be more different. Indeed, I've seen many successful proprietary-platform folks try their hand at open systems and bounce hard off the different constraints, cultures, and "soft power" thinking they require.

Doing strategy on behalf of a collectively-owned, open system is extremely unusual. Getting paid to do it is almost unheard of. And the job itself is strange; because facts about the ecosystem develop slowly, there isn't a great deal to re-derive from current events.

Companies also don't ask strategists to design and implement solutions in the opportunity spaces they identify. But solving problems is the only way to deliver progress, so along with others who do roughly similar work, I have camped out in roles that allow arguments about the health of the web ecosystem to motivate the concrete engineering projects necessary to light the fuse of web growth.

Indeed, nobody asked me to work on web performance, just as nobody asked me to develop PWAs, in the same way that nobody asked me to work on the capability gap between web and native. Each one falls out of the sort of strategy analysis I'm sharing in this post for the first time. These projects are examples of the sort of work I think anyone would do once they understood the stakes and marinated in the same data.

Luckily, inside of browser teams, I've found that largely to be true. Platform work attracts long-term thinkers, and those folks are willing to give strategy analysis a listen. This, in turn, has allowed the formation of large collaborations (like Project Fugu and Project Sidecar) to tackle the burning issues that pro-web strategy analysis yields.

Strategy without action isn't worth a damn, and action without strategy can easily misdirect scarce resources. It's a strange and surprising thing to have found a series of teams (and bosses) willing to support an oddball like me that works both sides of the problem space without direction.

So what is it that I do for a living? Whatever working to make the web a success for another generation demands. ↩︎

Just how bad is it?

This table shows the mobile Core Web Vitals scores for every production site listed on the Next.js showcase web page as of Oct 2024. It includes every site that gets enough traffic to report mobile-specific data, and ignores sites which no longer use Next.js:[10]

Mobile Core Web Vitals statistics for Next.js sites from Vercel's showcase, as well as the fraction of mobile traffic to each site. The last column indicates CWV stats (LCP, INP, and CLS) that consistently passed over the past 90 days.

| Site | mobile | LCP (ms) | INP (ms) | CLS | 90d pass |
| --- | --- | --- | --- | --- | --- |
| Sonos | 70% | 3874 | 205 | 0.09 | 1 |
| Nike | 75% | 3122 | 285 | 0.12 | 0 |
| OpenAI | 62% | 2164 | 387 | 0.00 | 2 |
| Claude | 30% | 7237 | 705 | 0.08 | 1 |
| Spotify | 28% | 3086 | 417 | 0.02 | 1 |
| Nerdwallet | 55% | 2306 | 244 | 0.00 | 2 |
| Netflix Jobs | 42% | 2145 | 147 | 0.02 | 3 |
| Zapier | 10% | 2408 | 294 | 0.01 | 1 |
| Solana | 48% | 1915 | 188 | 0.07 | 2 |
| Plex | 49% | 1501 | 86 | 0.00 | 3 |
| Wegmans | 58% | 2206 | 122 | 0.10 | 3 |
| Wayfair | 57% | 2663 | 272 | 0.00 | 1 |
| Under Armour | 78% | 3966 | 226 | 0.17 | 0 |
| Devolver | 68% | 2053 | 210 | 0.00 | 1 |
| Anthropic | 30% | 4866 | 275 | 0.00 | 1 |
| Runway | 66% | 1907 | 164 | 0.00 | 1 |
| Parachute | 55% | 2064 | 211 | 0.03 | 2 |
| The Washington Post | 50% | 1428 | 155 | 0.01 | 3 |
| LG | 85% | 4898 | 681 | 0.27 | 0 |
| Perplexity | 44% | 3017 | 558 | 0.09 | 1 |
| TikTok | 64% | 2873 | 434 | 0.00 | 1 |
| Leonardo.ai | 60% | 3548 | 736 | 0.00 | 1 |
| Hulu | 26% | 2490 | 211 | 0.01 | 1 |
| Notion | 4% | 6170 | 484 | 0.12 | 0 |
| Target | 56% | 2575 | 233 | 0.07 | 1 |
| HBO Max | 50% | 5735 | 263 | 0.05 | 1 |
| realtor.com | 66% | 2004 | 296 | 0.05 | 1 |
| AT&T | 49% | 4235 | 258 | 0.18 | 0 |
| Tencent News | 98% | 1380 | 78 | 0.12 | 2 |
| IGN | 76% | 1986 | 355 | 0.18 | 1 |
| Playstation Comp Ctr. | 85% | 5348 | 192 | 0.10 | 0 |
| Ticketmaster | 55% | 3878 | 429 | 0.01 | 1 |
| Doordash | 38% | 3559 | 477 | 0.14 | 0 |
| Audible (Marketing) | 21% | 2529 | 137 | 0.00 | 1 |
| Typeform | 49% | 1719 | 366 | 0.00 | 1 |
| United | 46% | 4566 | 488 | 0.22 | 0 |
| Hilton | 53% | 4291 | 401 | 0.33 | 0 |
| Nvidia NGC | 3% | 8398 | 635 | 0.00 | 0 |
| TED | 28% | 4101 | 628 | 0.07 | 1 |
| Auth0 | 41% | 2215 | 292 | 0.00 | 2 |
| Hostgator | 34% | 2375 | 208 | 0.01 | 1 |
| TFL "Have your say" | 65% | 2867 | 145 | 0.22 | 1 |
| Vodafone | 80% | 5306 | 484 | 0.53 | 0 |
| Product Hunt | 48% | 2783 | 305 | 0.11 | 1 |
| Invision | 23% | 2555 | 187 | 0.02 | 1 |
| Western Union | 90% | 10060 | 432 | 0.11 | 0 |
| Today | 77% | 2365 | 211 | 0.04 | 2 |
| Lego Kids | 64% | 3567 | 324 | 0.02 | 1 |
| Staples | 35% | 3387 | 263 | 0.29 | 0 |
| British Council | 37% | 3415 | 199 | 0.11 | 1 |
| Vercel | 11% | 2307 | 247 | 0.01 | 2 |
| TrueCar | 69% | 2483 | 396 | 0.06 | 1 |
| Hyundai Artlab | 63% | 4151 | 162 | 0.22 | 1 |
| Porsche | 59% | 3543 | 329 | 0.22 | 0 |
| elastic | 11% | 2834 | 206 | 0.10 | 1 |
| Leafly | 88% | 1958 | 196 | 0.03 | 2 |
| GoPro | 54% | 3143 | 162 | 0.17 | 1 |
| World Population Review | 65% | 1492 | 243 | 0.10 | 1 |
| replit | 26% | 4803 | 532 | 0.02 | 1 |
| Redbull Jobs | 53% | 1914 | 201 | 0.05 | 2 |
| Marvel | 68% | 2272 | 172 | 0.02 | 3 |
| Nubank | 78% | 2386 | 690 | 0.00 | 2 |
| Weedmaps | 66% | 2960 | 343 | 0.15 | 0 |
| Frontier | 82% | 2706 | 160 | 0.22 | 1 |
| Deliveroo | 60% | 2427 | 381 | 0.10 | 2 |
| MUI | 4% | 1510 | 358 | 0.00 | 2 |
| FRIDAY DIGITAL | 90% | 1674 | 217 | 0.30 | 1 |
| RealSelf | 75% | 1990 | 271 | 0.04 | 2 |
| Expo | 32% | 3778 | 269 | 0.01 | 1 |
| Plotly | 8% | 2504 | 245 | 0.01 | 1 |
| Sumup | 70% | 2668 | 888 | 0.01 | 1 |
| Eurostar | 56% | 2606 | 885 | 0.44 | 0 |
| Eaze | 78% | 3247 | 331 | 0.09 | 0 |
| Ferrari | 65% | 5055 | 310 | 0.03 | 1 |
| FTD | 61% | 1873 | 295 | 0.08 | 1 |
| Gartic.io | 77% | 2538 | 394 | 0.02 | 1 |
| Framer | 16% | 8388 | 222 | 0.00 | 1 |
| Open Collective | 49% | 3944 | 331 | 0.00 | 1 |
| Õhtuleht | 80% | 1687 | 136 | 0.20 | 2 |
| MovieTickets | 76% | 3777 | 169 | 0.08 | 2 |
| BANG & OLUFSEN | 56% | 3641 | 335 | 0.08 | 1 |
| TV Publica | 83% | 3706 | 296 | 0.23 | 0 |
| styled-components | 4% | 1875 | 378 | 0.00 | 1 |
| MPR News | 78% | 1836 | 126 | 0.51 | 2 |
| Me Salva! | 41% | 2831 | 272 | 0.20 | 1 |
| Suburbia | 91% | 5365 | 419 | 0.31 | 0 |
| Salesforce LDS | 3% | 2641 | 230 | 0.04 | 1 |
| Virgin | 65% | 3396 | 244 | 0.12 | 0 |
| GiveIndia | 71% | 1995 | 107 | 0.00 | 3 |
| DICE | 72% | 2262 | 273 | 0.00 | 2 |
| Scale | 33% | 2258 | 294 | 0.00 | 2 |
| TheHHub | 57% | 3396 | 264 | 0.01 | 1 |
| A+E | 61% | 2336 | 106 | 0.00 | 3 |
| Hyper | 33% | 2818 | 131 | 0.00 | 1 |
| Carbon | 12% | 2565 | 560 | 0.02 | 1 |
| Sanity | 10% | 2861 | 222 | 0.00 | 1 |
| Elton John | 70% | 2518 | 126 | 0.00 | 2 |
| InStitchu | 27% | 3186 | 122 | 0.09 | 2 |
| Starbucks Reserve | 76% | 1347 | 87 | 0.00 | 3 |
| Verge Currency | 67% | 2549 | 223 | 0.04 | 1 |
| FontBase | 11% | 3120 | 170 | 0.02 | 2 |
| Colorbox | 36% | 1421 | 49 | 0.00 | 3 |
| NileFM | 63% | 2869 | 186 | 0.36 | 0 |
| Syntax | 40% | 2531 | 129 | 0.06 | 2 |
| Frog | 24% | 4551 | 138 | 0.05 | 2 |
| Inflect | 55% | 3435 | 289 | 0.01 | 1 |
| Swoosh by Nike | 78% | 2081 | 99 | 0.01 | 1 |
| Passing % | | 38% | 28% | 72% | 8% |

Needless to say, these results are significantly worse than those of responsible JS-centric metaframeworks like Astro or HTML-first systems like Eleventy. Spot-checking their results gives me hope.
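For readers who want to score their own sites the same way, here is a minimal sketch of how figures like these map onto the published "good" thresholds for Core Web Vitals (LCP at or under 2500 ms, INP at or under 200 ms, CLS at or under 0.1). The `SiteVitals` shape and the `passCount` and `passRate` helpers are hypothetical names invented for illustration, and the sketch assumes the inputs are 75th-percentile field values. It scores a single snapshot, so its counts will not always match the "90d pass" column, which tracks metrics that passed consistently over 90 days.

```typescript
// Published "good" thresholds for Core Web Vitals.
// Assumption: inputs are 75th-percentile field values for mobile traffic.
const THRESHOLDS = { lcpMs: 2500, inpMs: 200, cls: 0.1 } as const;

// Hypothetical record shape for one row of the table.
interface SiteVitals {
  site: string;
  lcpMs: number;
  inpMs: number;
  cls: number;
}

// Count how many of the three metrics fall within the "good" range.
function passCount(v: SiteVitals): number {
  return (
    Number(v.lcpMs <= THRESHOLDS.lcpMs) +
    Number(v.inpMs <= THRESHOLDS.inpMs) +
    Number(v.cls <= THRESHOLDS.cls)
  );
}

// Fraction of rows for which the supplied predicate holds.
function passRate(
  rows: SiteVitals[],
  passes: (v: SiteVitals) => boolean
): number {
  if (rows.length === 0) return 0;
  return rows.filter(passes).length / rows.length;
}

// Four rows copied from the table, purely as sample input.
const sample: SiteVitals[] = [
  { site: "Plex", lcpMs: 1501, inpMs: 86, cls: 0.0 },
  { site: "Nike", lcpMs: 3122, inpMs: 285, cls: 0.12 },
  { site: "OpenAI", lcpMs: 2164, inpMs: 387, cls: 0.0 },
  { site: "Sonos", lcpMs: 3874, inpMs: 205, cls: 0.09 },
];

for (const row of sample) {
  console.log(`${row.site}: ${passCount(row)} of 3 metrics passing`);
}

const lcpRate = passRate(sample, (v) => v.lcpMs <= THRESHOLDS.lcpMs);
console.log(`LCP pass rate in sample: ${lcpRate}`);
```

Feeding every row through `passRate` with one predicate per metric is one way to reproduce a summary row like "Passing %", though the exact method behind the published percentages is not stated here.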

Failure doesn't have to be our destiny; we only need to change the way we build and support efforts that can close the capability gap. ↩︎

This phrasing — fraction of time spent, rather than absolute time — has the benefit of not being thirsty. It's also tracked by various parties.

The fraction of "Jobs To Be Done" happening on the web would be the natural leading metric, but it's challenging to track. ↩︎

Web developers aren't the only ones shooting their future prospects in the foot.

It's bewildering to see today's tech press capitulate to the gatekeepers. The Register and The New Stack stand apart in using above-the-fold column inches to cover just how rancid and self-dealing Apple, Google, and Facebook's suppression of the mobile web has been.

Most can't even summon their opinion bytes to highlight how broken the situation has become, or how the alternative would benefit everyone, even though it would directly benefit those outlets.

If the web has a posse, it doesn't include The Verge or TechCrunch. ↩︎

There are rarely KOs in platform competitions, only slow, grinding changes that look "boring" to a tech press that would rather report on social media contretemps.

Even the smartphone revolution, which featured never-before-seen device sales rates, took most of a decade to overtake desktop as the dominant computing form-factor. ↩︎

The web wasn't always competitive with native on desktop, either. It took Ajax (new capabilities), Moore's Law (RIP), and broadband to make it so. There had to be enough overhang in CPUs and network availability to make the performance hit of the web's languages and architecture largely immaterial.

This is why I continue to track the mobile device and network situation. For the mobile web to take off, it'll need to overcome similar hurdles to usability on most devices.

The only way that's likely to happen in the short-to-medium term is for developers to emit less JS per page. And that is not what's happening. ↩︎

One common objection to metaplatforms like the web is that the look and feel versus "native" UI is not consistent. The theory goes that consistent affordances create less friction for users as they navigate their digital world. This is a nice theory, but in practice the more important question in the fight between web and native look-and-feel is which one users encounter more often.

The dominant experience is what others are judged against. On today's desktops, browsers set the pace, leaving OSes with reduced influence. OS purists decry this as an abomination, but it just is what it is. "Fixing" it gains users very little.

This is particularly true in a world where OSes change up large aspects of their design languages every half decade. Even without the web's influence, this leaves a trail of unreconciled experiences sitting side-by-side uncomfortably. Web apps aren't an unholy disaster, just more of the same. ↩︎

No matter how much they protest that they love it (sus) and couldn't have succeeded without it (true), the web is, at best, an also-ran in the internal logic of today's tech megacorps. They can do platform strategy, too, and know that exerting direct control over access to APIs is worth its weight in Californium. As a result, the preferred alternative is always the proprietary (read: directly controlled and therefore taxable) platform of their OS frameworks. API access gatekeeping enables distribution gatekeeping, which becomes a business model chokepoint over time.

Even Google, the sponsor of the projects I pursued to close platform gaps, couldn't summon the organisational fortitude to inconvenience the Play team for the web's benefit. For its part, Apple froze Safari funding and API expansion almost as soon as the App Store took off. Both benefit from a cozy duopoly that forces businesses into the logic of heavily mediated app platforms.

The web isn't suffering on mobile because it isn't good enough in some theoretical sense, it's failing on mobile because the duopolists directly benefit from keeping it in a weakened state. And because the web is a collectively-owned, reach-based platform, neither party has to have their fingerprints on the murder weapon. At current course and speed, the web will die from a lack of competitive vigour, and nobody will be able to point to the single moment when it happened.

That's by design. ↩︎

Real teams, doing actual work, aren't nearly as wedded to React vs., e.g., Preact or Lit or FAST or Stencil or Qwik or Svelte as the JS-industrial-complex wants us all to believe. And moving back to the server entirely is even easier.

React is the last of the totalising frameworks. Everyone else is living in the interoperable, plug-and-play future (via Web Components). Escape doesn't hurt, but it can be scary. But teams that want to do better aren't wanting for off-ramps. ↩︎

It's hard to overstate just how fair this is to the over-Reactors. The (still) more common Create React App starter kit would suffer terribly by comparison, and Vercel has had years of browser, network, and CPU progress to water down the negative consequences of Next.js's blighted architecture.

Next has also been pitched since 2018 as "The React Framework for the Mobile Web" and "The React Framework for SEO-Friendly Sites". Nobody else's petard is doing the hoisting; these are the sites that Vercel is choosing to highlight, built using their technology, which has consistently advertised performance-oriented features like code-splitting and SSG support. ↩︎