Robert Biggs on why the current IE9 beta underwhelms on CSS3 capabilities:

IE9 is the IE6 of CSS3

There, I said it. Microsoft has been bombarding the media with claims about how much better IE9 is than all the other browsers, more HTML5 and CSS3 compliant than any other browser that ever existed or ever will. It’s the only browser that passes all the tests they made up. And, Microsoft has finally implemented the CSS3 selectors that were implemented by other browsers back in, what? 2003? Because Microsoft has updated IE to support CSS3 selectors and rounded corners, they want us to believe that somehow IE9 magically supports the whole slew of CSS3 visual styling. I’m afraid it doesn’t.... Firefox, Chrome and Safari can render graphically rich interfaces using the sophisticated features of CSS3. IE9 does, well, rounded corners...

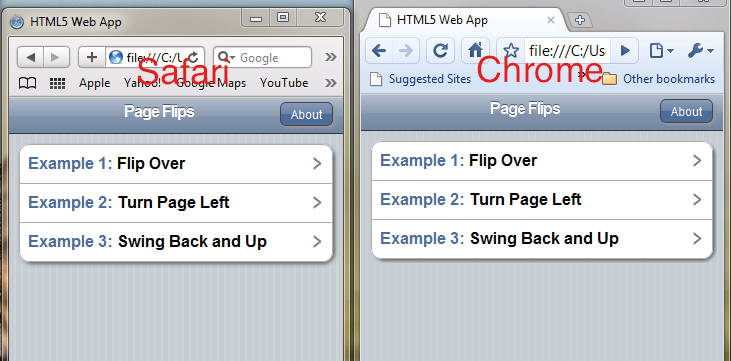

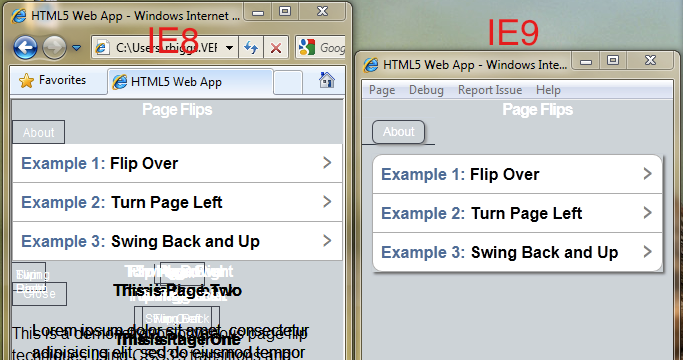

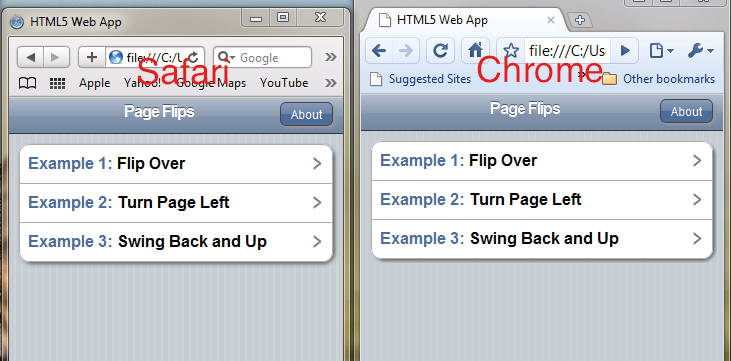

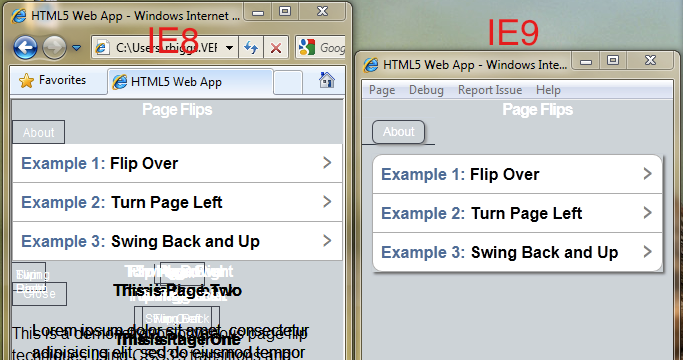

...I created a layout using CSS3 things like flexible box model, box sizing, border radius, box shadow, text shadow, background gradients, multiple backgrounds, background image sizing, transitions, transforms, and made sure that I supplied the IE9 equivalent of what it could handle. Firefox, Chrome and Safari are rendering the layout just fine. Are you ready to see what IE9 will do with it?

...

He goes on in hyperbolic fashion, and I disagree with much of it. IE 9 is absolutely an improvement over IE 8, and there's still time for IE 9 to improve before it's finally released, but how long will it be before IE 10 ships?

Progress in the browser isn't about particular versions of browsers; it's about the rate of improvement in engines combined with the rate of distribution. Given the lack of IE 9 for XP and the IE team's opacity around future releases, it seems we'll still need Chrome Frame for the foreseeable future. Ouch.

Web developers tell each other stories about browsers that just aren't true. Consider the following archetypal arguments:

It's not hard to support IE because I have [ progressive enhancement | javascript libraries | css tricks ]

Everyone using IE 6 should upgrade. Sheeesh! Anyone running an old browser is doing it all wrong, and how hard can it really be?

Nice theory about not developing for old browsers, buddy, but I can't afford to stop supporting bad browsers and neither can anyone else. This is life on the web, suck it up.

These are the fables we tell each other to help explain a complicated world in self-affirming narrative form. The first boils down to "I can do it!" while the second is "I don't have to do it". The third is an argument about platform reach and the compromises you need to make to get your content everywhere. All are too simple, and all are insidious.

The first argument -- "I can do it!" -- is a coping mechanism gone too far. Should we always be building semantic markup that degrades well, works in down-rev browsers to the extent it can, and uses progressive enhancement to fill in the potholes in the road? You bet. But let's be clear: the sorts of features it will take to keep the web a competitive platform require new semantics. We need the browser to expose new underlying stuff that can't be faked.

For example, tools like excanvas, dojox.gfx, and Raphaël let us draw vector graphics everywhere.

Do not mistake a little for a lot.

These tools, possibility-expanding as they are, can't give us the order-of-magnitude improvements we will get out of GPU-accelerated 2D and 3D contexts. If you build with the constraints of the old browsers in mind, using tools designed for them, you won't be building the same sorts of things you would if you could assume otherwise. Consider the costs: instead of writing a little bit of script to deal with a `<canvas>` element directly, you now depend on a sizable JavaScript library that adds overhead to each call, not to mention the latency of downloading that script. That's to say nothing of the things you won't even attempt because your wrapper doesn't support them or because legacy browsers can't perform enough operations per second.

The next argument -- "I don't have to do it" -- ignores upgrade costs. I've written about this at eye-watering length. This perspective has two common sources: looking at a small sliver of the browser market (e.g., mobile), and incomplete accounting of the costs involved in upgrades. Looking at only one part of the market is actually sane in the short run. Building for only one part of the market makes sense, so long as you can take out insurance (e.g., standards) that will help guarantee full market coverage over time. To me, this is the most hopeful story in the whole HTML5 debate. You can focus (variously) on mobile, tablet, or the Chrome Web Store and never leave the HTML5 bubble. And it gets bigger every day: IE 9 soon, new versions of every other browser even sooner, Chrome Frame (GCF), etc.

As for the folks who can't understand why IT departments might not jump at the chance to throw out something that's working, or might not be keen on retraining users...I've got nothing. Things that are costly will happen less. That's life. Also, please don't assume that by flagging these costs, I'm "excusing" organizations that are running IE6. That's a needlessly adversarial reading. We need instead to explain reality as it is and find ways to move toward "better" from there. That's why I work on Chrome Frame. You might not agree with the approach, but the economic problem is real and we need a solution.

Lastly, the jaded realist. This is a perspective I know well. I wake up many mornings feeling this way, and who wouldn't after most of a decade spent trying to make browsers do things they were never designed to do? What this story leaves out, though, is any discussion of how we might improve the situation. It's an easy omission to make. Focusing on the next month, or even the next year, you might get the sense that the browser landscape is more-or-less fixed. Hell, browser stats move by less than a percent a month. But we'd be wrong to assume that slow is zero, or that because things are slow now they must stay slow. So to all you hard-bitten realists out there: I get it. I know what you mean. You don't have to believe that help is on the way. I only ask that when it arrives, you take just as much ferocious advantage of the new reality as you do of the current one.

The web is moving again -- finally -- and if we can find clear ways of thinking about the future, we can find easier paths to getting there. Focusing on the economics of browser adoption gives us a way to integrate all of these fables into our theory of the world, explaining them and showing their strengths and faults. It's for that reason I'm hopeful: approaches such as focusing on mobile and tools like Chrome Frame address the root causes of our mire, and that's something new under the sun.