Cache and Prizes

Serious Platforms Don't Play Favourites

If you work on a browser, you will often hear remarks like, "Why don't you just put [popular framework] in the browser?"

This is a good question — or at least it illuminates how browser teams think about tradeoffs. Spoiler: it's gnarly.

Before we get into it, let's make the subtext of the proposal explicit:

- Libraries provided this way will be as secure and privacy-preserving as every other browser-provided API.

- Browsers will cache tools popular among vocal, leading-edge developers.

- There's plenty of space for caching the most popular frameworks.

- Developers won't need to do work to realise a benefit.

None of this holds.

The best available proxy data also suggests that shared caches would have a minimal positive effect on performance. So, it's an idea that probably won't work the way anyone wants it to, likely can't do much good, and might do a lot of harm.[1]

Understanding why requires more context, and this post is an attempt to capture the considerations I've been outlining on whiteboards for more than a decade.

Trust Falls #

Every technical challenge in this design space is paired with an even more daunting governance problem. Like other successful platforms, the web operates on a finely tuned understanding of risk and trust, and deprecations are particularly explosive.[2] As we will see, pressure to remove some libraries to make space for others will be intense.

That's the first governance challenge: who will adjudicate removals? Browser vendors? And if so, under what rules? The security implications alone mean that whomever manages removals will need to be quick, fair, and accurate. Thar be dragons.

Removal also creates a performance cliff for content that assumes cache availability. Vendors have a powerful incentive to "not break the web". Since cache sizes will be limited (more on that in a second), the predictable outcome will be slower library innovation. Privileging a set of legacy frameworks will create disincentives to adopt modern platform features and reduce the potential value of modern libraries. Introducing these incentives would be an obvious error for a team building a platform.

Entropic Forces #

An unstated, but ironclad, requirement of shared caches is that they are uniform, complete, and common.

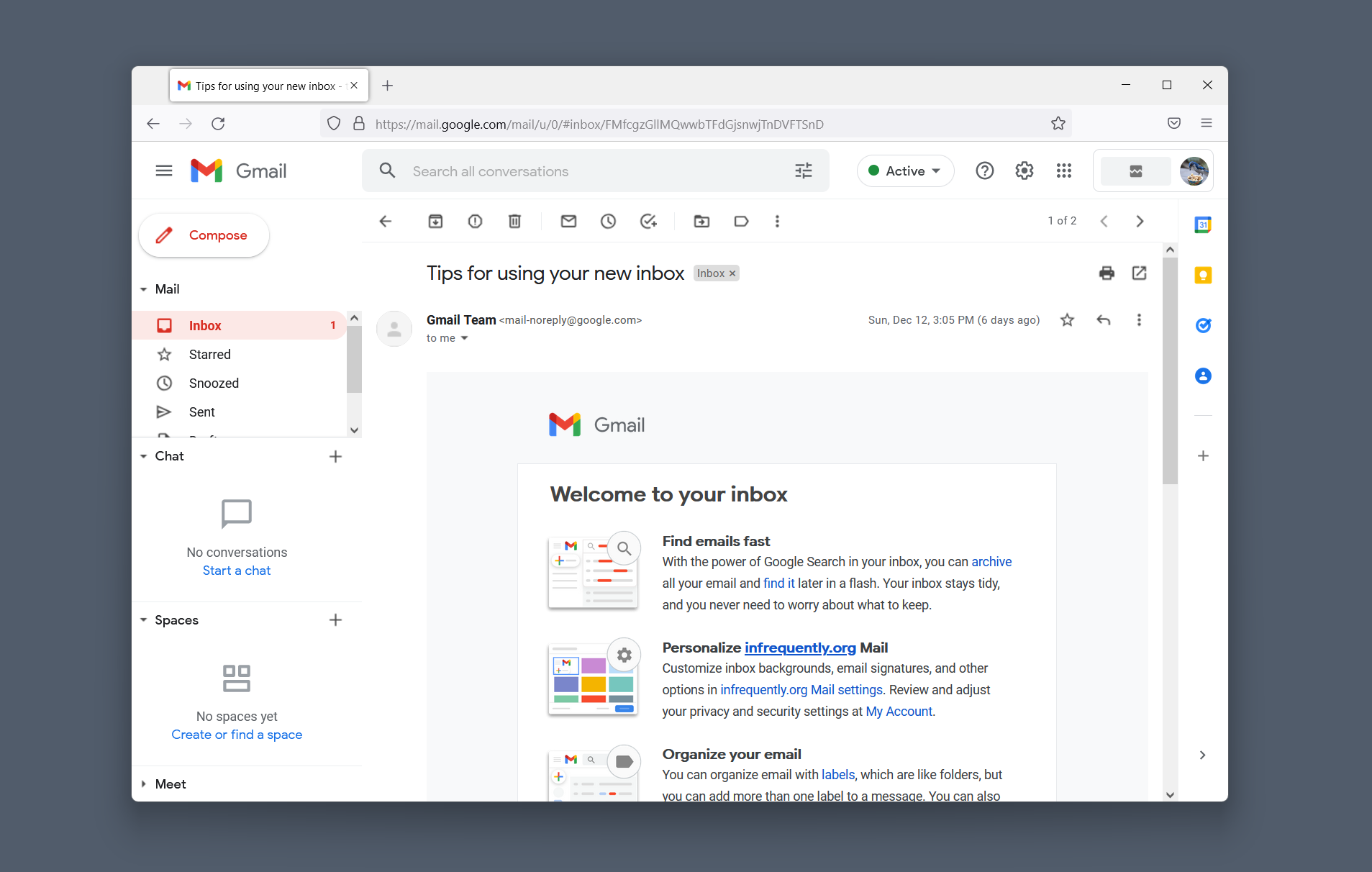

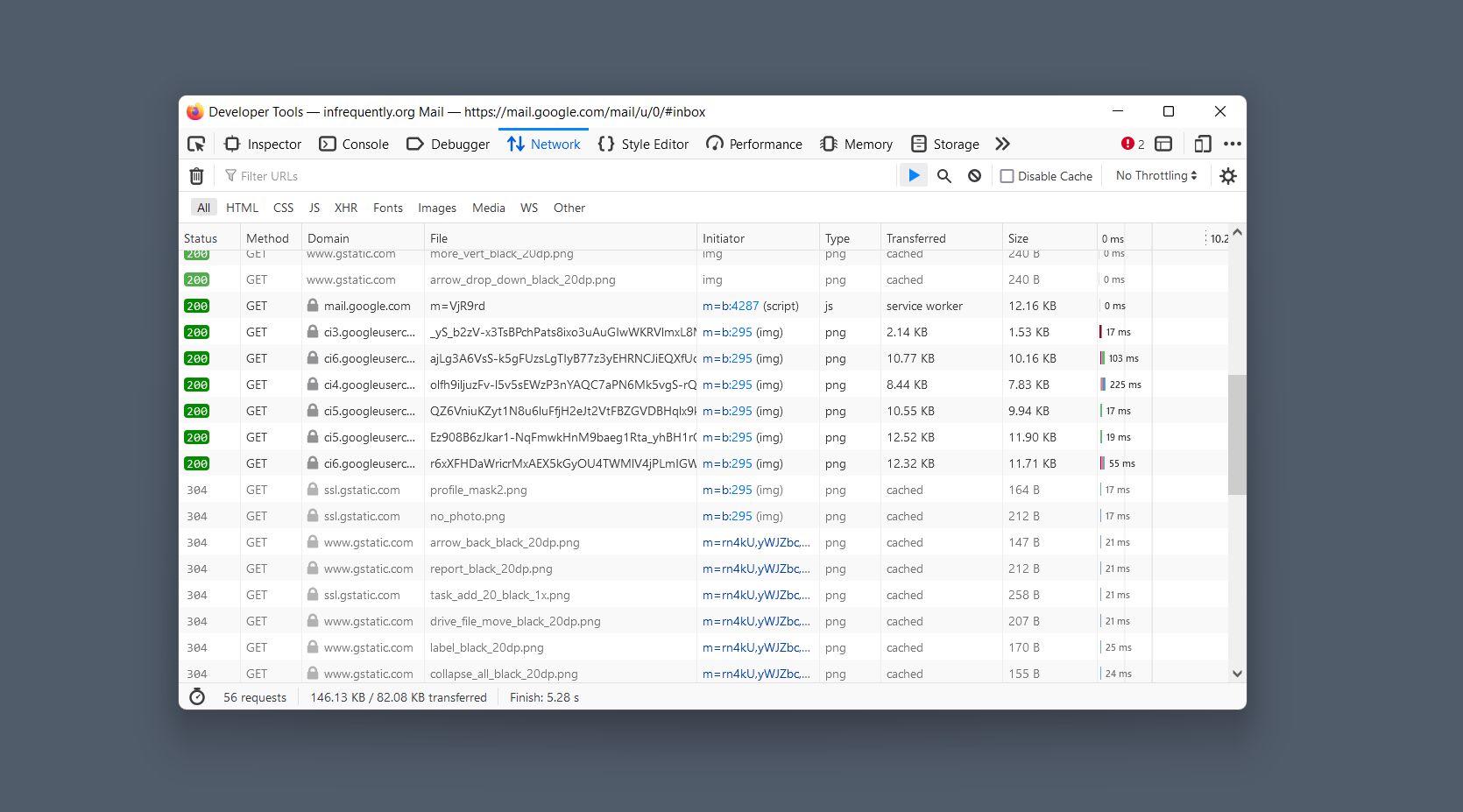

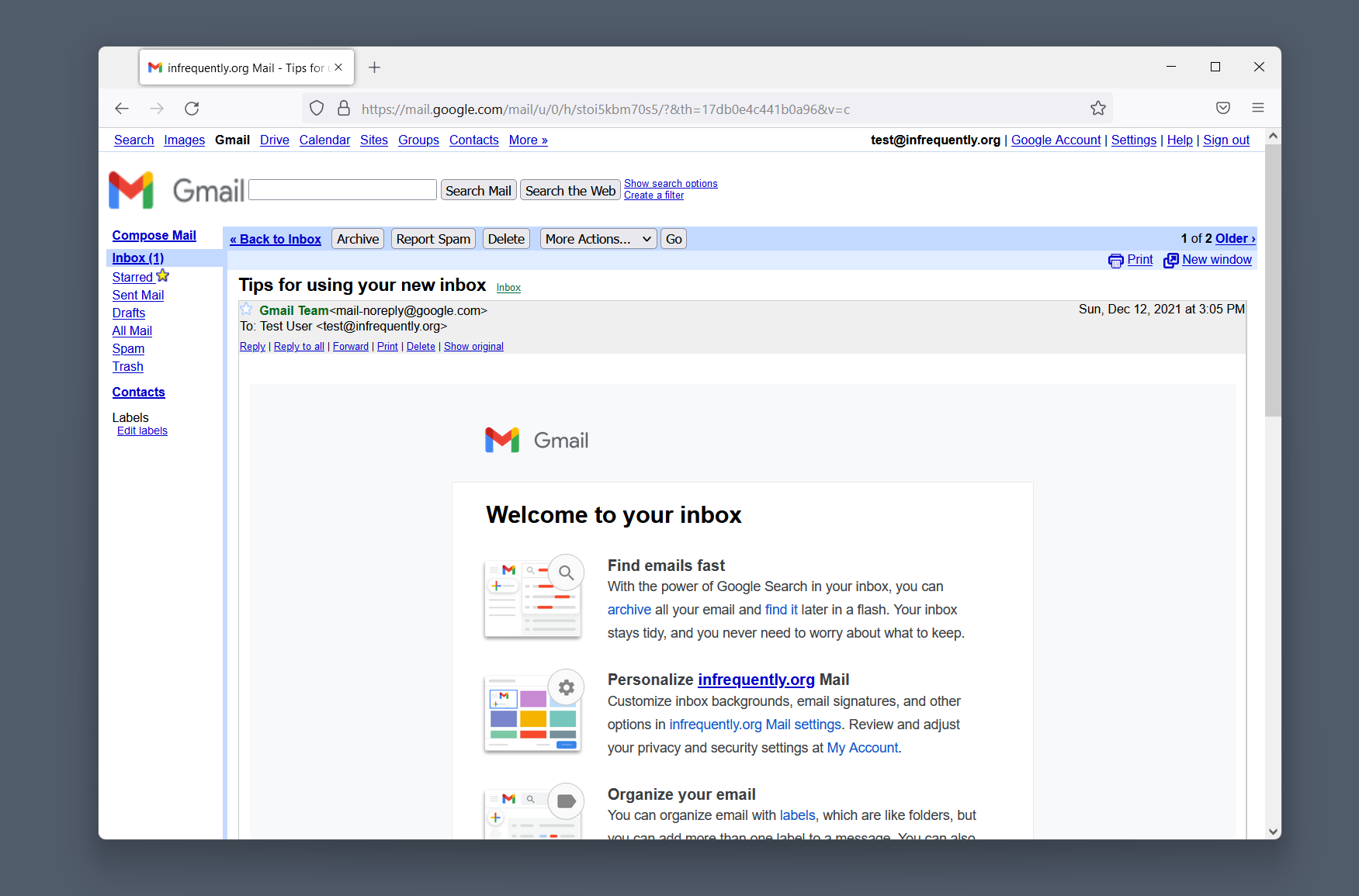

Browsers now understand the classic shared HTTP cache behaviour as a privacy bug.

Cache timing is a fingerprinting vector that is fixed via cache partitioning by origin. In the modern cache-partitioned world, if a user visits alice.com, which fetches https://example.com/cat.gif, then visits bob.com, which displays the same image, it will be requested again. Partitioning by origin ensures that bob.com can't observe any details about that user's browsing history. De-duplication can prevent multiple copies on disk, but we're out of luck on reducing network costs.
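As a rough illustration of the partitioning described above, here is a minimal sketch of a cache keyed on both the top-level site and the resource URL. The class name and the simulated network fetch are hypothetical, and real browsers use richer keys than this two-part tuple.

```python
from dataclasses import dataclass, field
from typing import Dict, Tuple


@dataclass
class PartitionedCache:
    """Toy HTTP cache keyed by (top-level site, resource URL)."""

    entries: Dict[Tuple[str, str], bytes] = field(default_factory=dict)
    network_fetches: int = 0

    def fetch(self, top_level_site: str, url: str) -> bytes:
        key = (top_level_site, url)
        if key not in self.entries:
            # Cache miss for this partition: go to the network (simulated here).
            self.network_fetches += 1
            self.entries[key] = f"body of {url}".encode()
        return self.entries[key]


cache = PartitionedCache()
cache.fetch("alice.com", "https://example.com/cat.gif")  # miss, fetched
cache.fetch("bob.com", "https://example.com/cat.gif")    # different partition, fetched again
cache.fetch("alice.com", "https://example.com/cat.gif")  # same partition, served from cache
print(cache.network_fetches)
```

Because the second site never observes a hit caused by the first, load timing reveals nothing about where else the user has been. The price is the duplicate network fetch.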

A shared library cache that isn't a fingerprinting vector must work like one big zip file: all or nothing. If a resource is in that bundle — and enough users have the identical bundle — using a resource from it on both alice.com and bob.com won't leak information about the user's browsing history.

Suppose a user has only downloaded part of the cache. A browser couldn't use resources from it, lest missing resources (leaked through a timing side channel) uniquely identify the user. These privacy requirements put challenging constraints on the design and practical effectiveness of a shared cache.
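One way to picture the all-or-nothing rule is a lookup that refuses to serve anything unless the local bundle matches the digest of the complete, common bundle. This is only a sketch: `bundle_digest`, `lookup`, and the file names are hypothetical, and a real design would also need to handle updates and disk pressure.

```python
import hashlib
from typing import Dict, Optional


def bundle_digest(resources: Dict[str, bytes]) -> str:
    """Digest over the whole bundle, independent of insertion order."""
    h = hashlib.sha256()
    for name in sorted(resources):
        h.update(name.encode())
        h.update(b"\0")
        h.update(hashlib.sha256(resources[name]).digest())
    return h.hexdigest()


def lookup(local: Dict[str, bytes], expected_digest: str, name: str) -> Optional[bytes]:
    """Serve from the shared cache only if the local copy is complete and uniform."""
    if bundle_digest(local) != expected_digest:
        # Partial or stale bundle: behave as if no shared cache exists at all.
        return None
    return local.get(name)


full_bundle = {"lib-a.js": b"/* a */", "lib-b.js": b"/* b */"}
expected = bundle_digest(full_bundle)

partial_bundle = {"lib-a.js": b"/* a */"}
print(lookup(full_bundle, expected, "lib-a.js") is not None)  # complete bundle: usable
print(lookup(partial_bundle, expected, "lib-a.js") is None)   # partial bundle: unusable
```

Note that the partial bundle is rejected even for a resource it does contain. Serving it would make the pattern of hits and misses specific to that user.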

Variations on a Theme #

But the completeness constraint is far from our only design challenge. When somebody asks, "Why don't you just put [popular framework] in the browser?", the thoughtful browser engineer's first response is often, "Which versions?"

This is not a dodge.

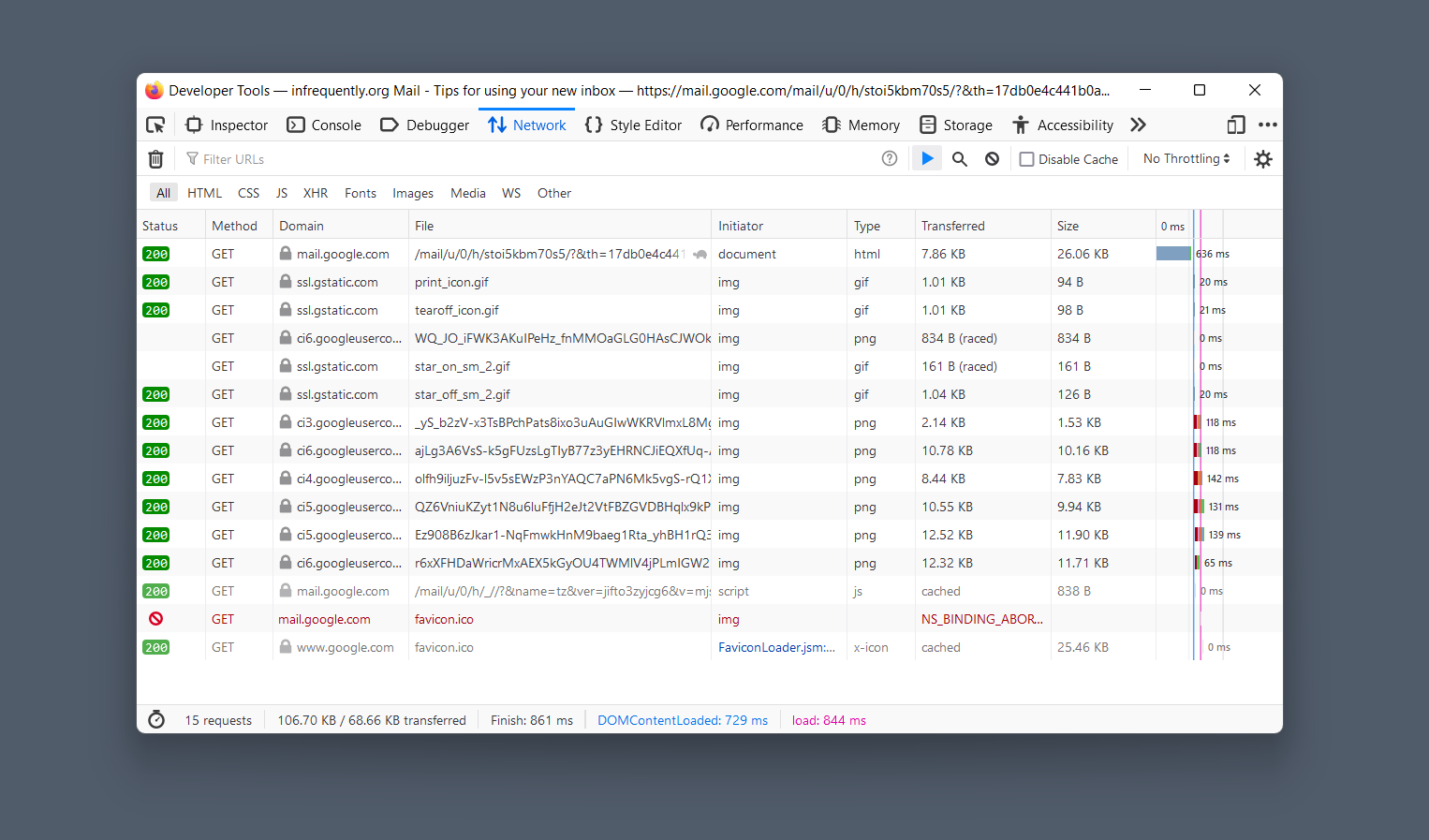

The question of precaching JavaScript libraries has been around since the Prototype days. It's such a perennial idea that vendors have looked into the real-world distribution of library use. One available data source is Google's hosted JS libraries service.

Last we looked, jQuery (still the most popular JS tool by a large margin) showed usage almost evenly split between five or six leading versions, with a long tail that slowly tapers. This reconfirms observations from the HTTP Archive, published by Steve Souders in 2013.

TL;DR?: Many variants of the same framework will occur in the cache's limited space. Because a few "unpopular" legacy tools are large, heavily used, and exhibit flat distribution of use among their top versions, the breadth of a shared cache will be much smaller than folks anticipate.

The web is not centrally managed, and sites depend on many different versions of many different libraries. Browsers are unable to rely on semantic versioning or trust file names because returned resources must contain the exact code that developers request. If browsers provide a similar-but-slightly-different file, things break in mysterious ways, and "don't break the web" is Job #1 for browsers.

Plausible designs must avoid keying on URLs or filenames. Another sort of opt-in is required because:

- It's impossible to capture most use of a library with a small list of URLs.

- Sites will need to continue to serve fallback copies of their dependencies.[3]

- File names are not trustworthy indicators of file contents.

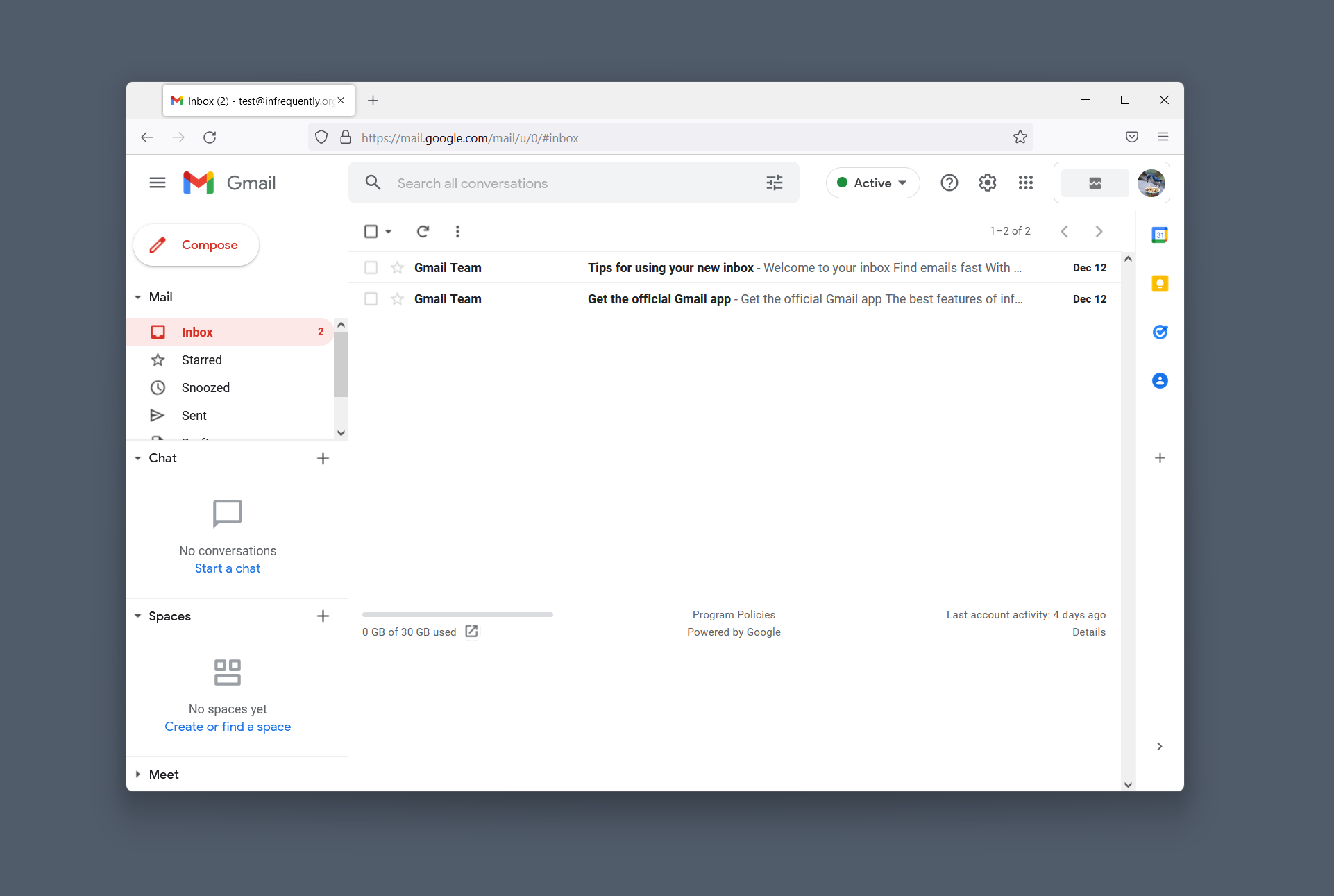

Subresource Integrity (SRI) to the rescue! SRI lets developers add a hash of the resource they're expecting as a security precaution, but we could re-use these assertions as a safe cache key. Sadly, relatively few sites deploy SRI today, with growth driven by a single source (Shopify), meaning developers aren't generally adopting it for their first-party dependencies.
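To make the "hash as cache key" idea concrete, the sketch below computes an SRI-style `sha384-` digest and uses it as the only key for a bundled resource. The cache class and file contents are hypothetical, and it handles a single digest rather than the whitespace-separated list that a real `integrity` attribute may carry.

```python
import base64
import hashlib
from typing import Dict, Iterable, Optional


def sri_digest(body: bytes) -> str:
    """Return an SRI-style integrity value for the given bytes."""
    raw = hashlib.sha384(body).digest()
    return "sha384-" + base64.b64encode(raw).decode("ascii")


class IntegrityKeyedCache:
    """Toy shared cache addressed only by content hash, never by URL."""

    def __init__(self, bundled: Iterable[bytes]) -> None:
        self._by_digest: Dict[str, bytes] = {sri_digest(b): b for b in bundled}

    def lookup(self, integrity: Optional[str]) -> Optional[bytes]:
        if integrity is None:
            # No SRI annotation means the site has not opted in.
            return None
        return self._by_digest.get(integrity)


# Hypothetical file contents standing in for two releases of one library.
v1 = b"function lib(){return 1}"
v2 = b"function lib(){return 2}"

shared = IntegrityKeyedCache([v1])
print(shared.lookup(sri_digest(v1)) == v1)  # exact bytes requested: hit
print(shared.lookup(sri_digest(v2)))        # similar but different file: miss
print(shared.lookup(None))                  # no integrity attribute: miss
```

Keying on content means a file name or version string can never cause the wrong bytes to be substituted. It also means that every distinct version needs its own slot in the cache.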

It turns out this idea has been circulating for a while. Late in the drafting of this post, it was pointed out to me by Frederik Braun that Brad Hill had considered the downsides of a site-populated SRI addressable cache back in 2016. Since that time, SRI adoption has yet to reach a point where it can meaningfully impact cache hit rates. Without pervasive SRI, it's difficult to justify the potential download size hit of a shared cache, not to mention the ongoing required updates.

The size of the proposed cache matters to vendors because browser binary size increases negatively impact adoption. The bigger the download, the less likely users are to switch browsers. The graphs could not be more explicit: downloads and installs fail as binaries grow.

Browser teams aggressively track and manage browser download size, and scarce engineering resources are spent to develop increasingly exotic mechanisms to distribute only the code a user needs. They even design custom compression algorithms to make incremental patch downloads smaller. That's how much wire size matters. Shared caches must make sense within this value system.

Back of the Napkin #

So what's a reasonable ballpark for a maximum shared cache? Consider:

- Browser engineers are byte misers.

- That's because any cache would need to be sized to the expected free space of the marginal (P95) user.

- Firefox for Android is between 70 and 85 MiB in compressed APK form.

- Chrome for Android varies in size between 55 and 75 MiB.

- Release-over-release growth is often less than 1 MiB.

- Cache growth will be difficult and slow.

The last point is crucial because it will cement the cache's contents for years, creating yet another governance quagmire. The space available for cache growth will be what's left for the marginal user after accounting for increases in the browser binary. Most years, that will be nothing. Browser engineers won't give JS bundles breathing room they could use to win users.

Given these constraints, it's impossible to imagine a download budget larger than 20 MiB for a cache. It would also be optional (not bundled with the browser binary) and perhaps fetched on the first run (if resources are available). Having worked on browsers for more than a decade, I think it would be shocking if a browser team agreed to more than 10 MiB for a feature like this, especially if it won't dramatically speed up the majority of existing websites. Such a broad speed-up is unlikely given the need for SRI annotations or the unbecoming practice of speeding up high-traffic sites, but not others. These considerations put tremendous downward pressure on the prospective size budget for a cache.

20 MiB over the wire expands to no more than 100 MiB of JS with aggressive Brotli compression. A more likely 5 MiB (or less) wire size budget provides roughly 25 MiB of on-disk size. Assuming no source maps, this may seem like a lot, but recall that we need to include many versions of popular libraries. A dozen versions of jQuery, jQuery UI, and Moment (all very common) burn through this space in a hurry.
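The napkin math can be written out directly. The 5× expansion factor is the one implied by the figures above (20 MiB on the wire, 100 MiB on disk). The per-library sizes are round placeholder values chosen for illustration, not measurements.

```python
KIB = 1024
MIB = 1024 * KIB

# Implied by the text: 20 MiB over the wire expands to about 100 MiB on disk.
COMPRESSION_RATIO = 5


def on_disk_budget(wire_bytes: int) -> int:
    """Uncompressed space available for a given compressed download budget."""
    return wire_bytes * COMPRESSION_RATIO


# Placeholder minified sizes per version (assumptions, not measured values).
ASSUMED_SIZE_PER_VERSION = {
    "jquery": 90 * KIB,
    "jquery-ui": 250 * KIB,
    "moment": 300 * KIB,
}
VERSIONS_EACH = 12  # "a dozen versions" of each library

budget = on_disk_budget(5 * MIB)
used = VERSIONS_EACH * sum(ASSUMED_SIZE_PER_VERSION.values())
share = used / budget

print(f"budget: {budget / MIB:.1f} MiB, used: {used / MIB:.1f} MiB ({share:.0%})")
```

Under these assumed sizes, three libraries consume close to a third of the likely budget before any other script is considered.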

One challenge for fair inclusion is the tension between site-count-weighting and traffic-weighting. Crawler-based tools (like the HTTP Archive's Almanac and BuiltWith) give a distorted picture. A script on a massive site can account for more traffic than many thousands of long-tail sites. Which way should the policy go? Should a cache favour code that occurs in many sites (the long tail), or the code that has the most potential to improve the median page load?
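A toy data set shows how the two weightings can disagree. All of the site names, script names, and traffic figures below are invented for illustration.

```python
from collections import Counter
from typing import Callable, Dict, List


def rank(sites: List[Dict], weight: Callable[[Dict], int]) -> List[str]:
    """Rank scripts by the total weight of the sites that include them."""
    totals: Counter = Counter()
    for site in sites:
        for script in site["scripts"]:
            totals[script] += weight(site)
    return [name for name, _ in totals.most_common()]


# One massive site using a media player script (hypothetical figures).
sites = [
    {"name": "megasite.example", "monthly_page_loads": 50_000_000,
     "scripts": ["media-player.js"]},
]
# A thousand long-tail sites that all use the same legacy library.
sites += [
    {"name": f"small-{i}.example", "monthly_page_loads": 100,
     "scripts": ["legacy-lib.js"]}
    for i in range(1000)
]

by_site_count = rank(sites, lambda s: 1)
by_traffic = rank(sites, lambda s: s["monthly_page_loads"])

print(by_site_count[0])  # what a crawler-style count favours
print(by_traffic[0])     # what a traffic-weighted view favours
```

A crawler-style count puts the legacy library first, while traffic weighting puts the single large site's script first. A fixed-size cache has to choose between these two outcomes.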

Thar be more dragons.

Today's Common Libraries Will Be Underrepresented #

Anyone who has worked on a modern JavaScript front end is familiar with the terrifyingly long toolchains that dominate the landscape today. Putting aside my low opinion of the high costs and slow results, these tools all share a crucial feature: code motion.

Modern toolchains ensure libraries are transformed and don't resemble the files that arrive on disk from npm. From simple concatenation to the most sophisticated tree-shaking, the days of downloading library.min.js and SFTP-ing one's dependencies to the server are gone. A side effect of this change has been a reduction in the commonality of site artifacts. Even if many sites depend on identical versions of a library, their output will mix that code with bits from other tools in ways that defeat matching hashes.
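The effect on hash matching is easy to demonstrate. In this sketch the byte strings are hypothetical stand-ins for a library and two site bundles. Real toolchains go further and rewrite the library's bytes as well, through minification and tree-shaking.

```python
import hashlib


def digest(body: bytes) -> str:
    return hashlib.sha256(body).hexdigest()


# Stand-in for a library as published (hypothetical contents).
LIBRARY = b"function lib(){return 42}"

# Two sites ship the identical library, concatenated with their own code.
site_a_bundle = b"/* site A */" + LIBRARY + b"appA();"
site_b_bundle = LIBRARY + b"appB();"

print(LIBRARY in site_a_bundle and LIBRARY in site_b_bundle)  # same library inside both
print(digest(site_a_bundle) == digest(LIBRARY))               # but no hash matches
print(digest(site_a_bundle) == digest(site_b_bundle))
```

A content-addressed cache can only match whole files, so neither bundle would hit an entry for the standalone library.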

Advocates for caches suggest this is solvable, but it is not — at least in any reasonable timeframe or in a way that is compatible with other pressures outlined here. Frameworks built in an era that presumes transpilers and npm may never qualify for inclusion in a viable shared cache.

Common Sense #

Because of privacy concerns, caches will be disabled for a non-trivial number of users. Many folks won't have enough disk space to include a uniform and complete version, and the cache file will be garbage collected under disk pressure for others.

Users at the "tail" tend not to get updates to their software as often as users in the "head" and "torso" of the distribution. Multiple factors contribute, including the pain of updates on slow networks and devices, systems that are out of disk space, and harmful interactions with AV software. One upshot is that browsers will need to delete or disable shared caches for users in this state so they don't become fingerprinting vectors. A simple policy would be to remove caches from service after a particular date, creating a performance cliff that disproportionately harms those on the slowest devices.
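The "simple policy" above might look something like the following sketch. The 90-day window and the function name are assumptions made up for the example.

```python
from datetime import date, timedelta

# Hypothetical service window; a real policy would be set by the vendor.
MAX_BUNDLE_AGE = timedelta(days=90)


def cache_in_service(bundle_released: date, today: date) -> bool:
    """A bundle older than the window is ignored, however complete it is."""
    return today - bundle_released <= MAX_BUNDLE_AGE


released = date(2024, 1, 1)
print(cache_in_service(released, date(2024, 3, 1)))  # recently updated user
print(cache_in_service(released, date(2024, 6, 1)))  # user who has not updated
```

The second user falls off the cliff: every site that was being served from the cache reverts to full network fetches at once.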

First, Do No Harm #

Code distributed to every user is a tax on end-user resources, and because caches must be uniform, complete, and common, they will impose a regressive tax. The most enfranchised users with the most high-performance devices and networks will feel their initial (and ongoing) download impacted the least. In contrast, users on the margins will pay a relatively higher price for any expansions of the cache over time and any differential updates to it.

Induced demand is real, and it widens inequality, rather than shrinking it.

If a shared cache is to do good, it must do good for those in the tail and on the margins, not make things worse for them.

For all of the JS ecosystem's assertions that modern systems are fast enough to support the additional overhead of expensive parallel data structures, slow diffing algorithms, and unbounded abstractions, nearly all computing growth over the past decade has occurred at the low end. For most of today's users, the JS community's shared assumptions about compute and bandwidth abundance have been consistently wrong.

The dream of a global, shared, user-subsidised cache springs from the same mistaken analysis about the whys and wherefores of client-side computing. Perhaps one's data centre is post-scarcity, and maybe the client side will be too, someday. But that day is not today. And it won't be this year either.

Cache Back #

Speaking of governance, consider that a shared cache would suddenly create disincentives to adopt anything but the last generation of "winner" libraries. Instead of fostering innovation, the deck would be stacked against new and innovative tools that best use the underlying platform. This is a double disincentive. Developers using JS libraries would suffer an additional real-world cost whenever they pick a more modern option, and browser vendors would feel less pressure to integrate new features into the platform.

As a strategy to improve performance for users, significant questions remain unanswered. Meanwhile, such a cache poses a clear and present danger to the cause of platform progress. The only winners are the makers of soon-to-be obsolete legacy frameworks. No wonder they're asking for it.

Own Goals #

At this point, it seems helpful to step back and consider that the question of resource caching may have different constituencies with different needs:

- Framework Authors may be proposing caching to reduce the costs to their sites or users of their libraries.

- End Users may want better caching of resources to speed up browsing.

For self-evident security and privacy reasons, browser vendors will be the ones to define the eventual contents of a shared cache and distribute it. Therefore, it will be browser imperatives that drive its payload. This will lead many to be surprised and disappointed at the contents of a fair, shared global cache.

First, to do best by users, the cache will likely be skewed away from the tools of engaged developers building new projects on the latest framework because the latest versions of libraries will lack the deployed base to qualify for inclusion. Expect legacy versions of jQuery and Prototype to find a much more established place in the cache than anything popular in "State Of" surveys.

Next, because it will be browser vendors that manage the distribution of the libraries, they are on the hook for creating and distributing derivative works. What does this mean? In short, a copyright and patent minefield. Consider the scripts most likely to qualify based on use: code for embedding media players, analytics scripts, and ad network bootstraps. These aren't the scripts that most people think of when they propose that "browsers should ship with the top 1 GiB of npm built-in", but they are the sorts of code that will have the highest cache hit ratios.

Also, they're copyrighted and unlikely to be open-source, creating legal headaches that no amount of wishing can dispel.

Browsers build platform features through web standards, not because it's hard to agree on technical details (although it is), but because vendors need the legal protections that liberally licensed standards provide.[4] These protections are the only things standing between hordes of lawyers and the wallets of folks who build on the web platform. Even OSS isn't enough to guarantee the sorts of patent license and pooling that Standards Development Organisations (SDOs) like the W3C and IETF provide.

A reasonable response would be to have caches constrain themselves to non-copyleft OSS, rendering them less effective.

And, so we hit bedrock. If the goal isn't to make things better for users at the margins, why bother? Serving only the enfranchised isn't what the web is about. Proposals that externalise governance and administrative costs are also unattractive to browser makers. Without a credible plan for deprecation and removal, why wade into this space? It's an obvious trap.

"That's Just Standardisation With Extra Steps!" #

Another way to make libraries smaller is to upgrade the platform. New platform features usually let developers remove code, which can reduce costs. This is in the back of browser engineers' minds when asked, "Why don't you just put Library X in the browser?"

A workable cache proposal features the same problems as standards development:

- Licensing limitations

- Challenging deprecations

- Opt-in to benefit

- Limits on what can be added

- Difficulties agreeing about what to include

Why build this new tool when the existing ones are likely to be as effective, if not more so?

Compression is a helpful lens for thinking about caches and platform APIs. The things that platforms integrate into the de facto computing base are nouns and verbs. As terms become common, they no longer need to be explained every time.

Requests to embed libraries into the web's shared computing base express a desire to increase their compression ratio.

Shared precaching is an inefficient way to accomplish the goal. If we can identify common patterns being downloaded frequently, we can modify the platform to include standardised versions. Either way, developers need to account for situations when the native implementations aren't available (polyfilling).

Given that a shared cache system will look nothing like the dreams of those who propose it, it's helpful to instead ask why browser vendors are moving so slowly to improve the DOM, CSS, data idioms, and many other core areas of the platform in response to the needs expressed by libraries.

Thoughtful browser engineers are right to push back on shared caches, but the slow pace of progress in the DOM (at the hands of Apple's under-funding of the Safari team) has been a collective disaster. If we can't have unicorns, we should at least be getting faster horses.

Is There a Version That Could Work? #

Perhaps, but it's unlikely to resemble anything that web developers want. First, let's re-stipulate the constraints previously outlined:

- Sites will need to opt in.

- Caches can't be both large and fair.

- Caches will not rev or grow quickly.

- Caches will mainly comprise different versions of "unpopular", legacy libraries.

To be maximally effective, we might want a cache to trigger for sites that haven't opted in via SRI. A bloom filter could elide SRI annotations for high-traffic files, but this presents additional governance and operational challenges.

Only resources served as public and immutable (as observed by a trusted crawler) can possibly be auto-substituted. A browser that is cavalier enough to attempt to auto-substitute under other conditions deserves all of the predictable security vulnerabilities it will create.

An auto-substitution URL list will take space, and must also be uniform, complete, and common for privacy reasons. This means that the list itself is competing for space with libraries. This creates real favouritism challenges.
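For readers unfamiliar with the bloom filter mentioned above, here is a minimal sketch of how one could compactly encode an auto-substitution URL list. The sizes, hash construction, and URLs are assumptions. Because bloom filters can return false positives, a hit can only ever nominate a candidate, and the fetched bytes would still have to be verified against the cached content.

```python
import hashlib
from typing import Iterator


class BloomFilter:
    """Fixed-size set membership sketch: no false negatives, some false positives."""

    def __init__(self, size_bits: int, num_hashes: int) -> None:
        self.size_bits = size_bits
        self.num_hashes = num_hashes
        self.bits = bytearray((size_bits + 7) // 8)

    def _positions(self, item: str) -> Iterator[int]:
        # Derive several bit positions by salting one hash function.
        for i in range(self.num_hashes):
            h = hashlib.sha256(f"{i}:{item}".encode()).digest()
            yield int.from_bytes(h[:8], "big") % self.size_bits

    def add(self, item: str) -> None:
        for p in self._positions(item):
            self.bits[p // 8] |= 1 << (p % 8)

    def __contains__(self, item: str) -> bool:
        return all(self.bits[p // 8] & (1 << (p % 8)) for p in self._positions(item))


# Hypothetical list of public, immutable URLs observed by a trusted crawler.
substitutable = BloomFilter(size_bits=1024, num_hashes=4)
substitutable.add("https://cdn.example/lib/1.0.0/lib.min.js")
substitutable.add("https://cdn.example/lib/1.1.0/lib.min.js")

print("https://cdn.example/lib/1.0.0/lib.min.js" in substitutable)  # always True once added
print(len(substitutable.bits), "bytes, regardless of how long the URLs are")
```

The filter's size is fixed up front, which makes its cost easy to budget, but every bit it uses is still taken from the space available for libraries.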

A cache designed to do the most good will need mechanisms to work against induced demand. Many policies could achieve this, but the simplest might be for vendors to disable caches for developers and users on high-end machines. We might also imagine adding scaled randomness to cache hits: the faster the box, the more often a cache hit will silently fail.
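The "scaled randomness" idea could be sketched as below. The normalised `device_score` (0 for the slowest devices, 1 for the fastest) is a hypothetical input, and how a browser would derive such a score is left open.

```python
import random


def cache_hit_allowed(device_score: float, rng: random.Random) -> bool:
    """Allow a shared-cache hit with probability (1 - device_score).

    device_score is an assumed value in [0, 1]: 0 = slowest, 1 = fastest.
    """
    score = min(1.0, max(0.0, device_score))
    # rng.random() is in [0.0, 1.0), so score 0 always allows and score 1 never does.
    return rng.random() >= score


rng = random.Random(0)  # seeded only to make the demonstration repeatable
for score in (0.0, 0.5, 1.0):
    hits = sum(cache_hit_allowed(score, rng) for _ in range(1000))
    print(f"device_score={score}: {hits} of 1000 lookups allowed")
```

The slowest devices always benefit, the fastest never do, and everything in between degrades smoothly rather than at a hard threshold.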

Such policies won't help users stuck at the tail of the distribution, but might add a pinch of balance to a system that could further slow progress on the web platform.

A workable cache will also need a new governance body within an existing OSS project or SDO. The composition of such a working group will be fraught. Rules that ensure representation by web developers and browser vendors (not framework authors) can be postulated, but governance will remain challenging. How security researchers and users on the margins will be represented remains an open problem.

So, could we add a cache? If all the challenges and constraints outlined above are satisfied, maybe. But it's not where I'd recommend anyone who wants to drive the web forward invest their time — particularly if they don't relish chairing a new, highly contentious working group.

Thanks to Eric Lawrence, Laurie Voss, Fred K. Schott, Frederik Braun, and Addy Osmani for their insightful comments on drafts of this post.

[1] My assessment of the potential upside of this sort of cache is generally negative, but in the interest of fairness, I should outline some ways in which pre-caching scripts could speed them up:

- Scripts downloaded this way can be bytecode cached on the device (at the cost of some CPU burn), but this will put downward pressure on both the size of the cache (as bytecode takes 4-8× the disk space of script text) and on cache growth (time spent optimizing potentially unused scripts is a waste).

- The download-time benefits scale with the age of the script loading technique. For example, using a script from a third-party CDN requires DNS, TCP, TLS, and HTTP handshaking to a new server, all of which can be shortcut. The oldest sites are the most likely to use this pattern, but are also most likely to be unmaintained.

[2] Case in point: it was news last year when the Blink API owners[5] approved a plan to deprecate and remove the long-standing `alert()`, `confirm()`, and `prompt()` methods from within cross-origin `<iframe>`s. Not all `<iframe>`s would be affected, and top-level documents would continue to function normally. The proposal was surgical: narrowly tailored to address user abuse while reducing collateral damage.

The change was also shepherded with care and caution. The idea was floated in 2017, aired in concrete form for more than a year, and our friends at Mozilla spoke warmly of it. WebKit even implemented the change. This deprecation built broad consensus and was cautiously introduced.

It blew up anyway.[6]

Overnight, influential web developers — including voices that regularly dismiss the prudential concerns of platform engineers — became experts in platform evolution, security UX, nested event loops in multi-process applications, Reverse Origin Trials, histograms, and Chromium's metrics. More helpfully, collaboration with affected enterprise sites is improving the level of collective understanding about the risks. Changes are now on hold until the project regains confidence through this additional data collection.

This episode and others like it reveal that developers expect platforms to be conservative. Their trust in browsers comes from the expectation that the surface they program to will not change, particularly regarding existing and deployed code.

And these folks weren't wrong. It is the responsibility of platform stewards to maintain stability. There's even a helpful market incentive attached: browsers that don't render all sites don't have many users. The fast way to lose users is to break sites, and in a competitive market, that means losing share. The compounding effect is for platform maintainers to develop a (sometimes unhelpful) belief that moving glacially is good per se.

A more durable lesson is that, like a diamond, anything added to the developer-accessible surface of a successful platform may not be valuable, but it is forever.[7]

[3] With the advent of H/2 connection re-use and partitioned caches, hosting third-party libraries has become an anti-pattern. It's always faster to host files from your server, which means a shared cache shouldn't encourage users to centralise on standard URLs for hosted libraries, lest they make the performance of the web even worse when the cache is unavailable.

[4] For an accessible introduction to the necessity of SDOs and recent history of modern technical standard development, I highly recommend Open Standards and the Digital Age by Andrew L. Russell (no relation).

[5] Your humble narrator serves as a Blink API OWNER and deserves his share of the blame for the too-hasty deprecation of `alert()`, `confirm()`, and `prompt()`. In Blink, the buck stops with us, not the folks proposing changes, and this was a case where we should have known that our lingering "enterprise blindness"[6] in the numbers merited even more caution, despite the extraordinary care taken by the team.

[6] Responsible browser projects used to shoot from the hip when removing features, which often led to them never doing it due to the unpredictability of widespread site breakage.

Thankfully, this is no longer the case, thanks to the introduction of anonymised feature instrumentation and metrics. These data sets are critical to modern browser teams, powering everything from global views of feature use to site-level performance reporting and efforts like Core Web Vitals.

One persistent problem has been what I've come to think of as "enterprise blindness".

In the typical consumer scenario, users are prompted to opt in to metrics and crash reporting on the first run. Even if only a relatively small set of users participate, the Law of Large Numbers ensures our understanding of these histograms is representative across the billions of pages out there.

By contrast, enterprises roll out software for their users and push policy configurations to machines that generally disable metrics reporting. The result is that these users and the sites they frequent are dramatically under-reported in the public stats.

Given the available data, the team deprecating cross-origin `<iframe>` prompts was being responsible. But the data had a blind spot, one whose size has been maddeningly difficult to quantify.

[7] Forever, give or take half a decade.