Towards a Unified Theory of Web Performance

Note: This post first ran as part of Sergey Chernyshev and Stoyan Stefanov's indispensable annual series. It's being reposted here for completeness, but if you care about web performance, make sure to check out the whole series and subscribe to their RSS feed to avoid missing any of next year's posts.

In a recent Perf Planet Advent Calendar post, Tanner Hodges asked for what many folks who work in the space would like for the holidays: a unified theory of web performance.

I propose four key ingredients:

- Definition: What is "performance" beyond page speed? What, in particular, is "web performance"?

- Purpose: What is web performance trying to accomplish as a discipline? What are its goals?

- Principles: What fundamental truths are guiding the discipline and moving it forward?

- Practice: What does it look like to work on web performance? How do we do it?

This is a tall order!

A baseline theory, doctrine, and practicum represent months of work. While I don't have that sort of space and time at the moment, the web performance community continues to produce incredible training materials, and I trust we'll be able to connect theory to practice once we roughly agree on what web performance is and what it's for.

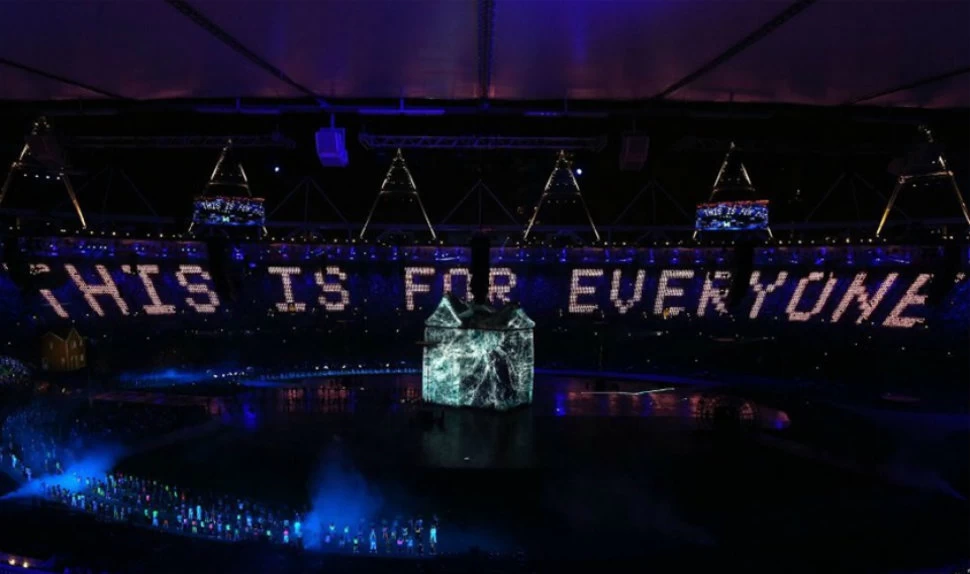

This Is for Everyone

Embedded in the term "web performance" is the web, and the web is for humans.

That assertion might start an argument in the wrong crowd, but 30+ years into our journey, attempts to promote a different first-order constituency are considered failures, as the Core Platform Loop predicts. The web ecosystem grows or contracts with its ability to reach people and meet their needs with high safety and low friction.

Taking "this is for everyone" seriously, aspirational goals for web performance emerge. To the marginal user, performance is the difference between access and exclusion.

The mission of web performance is to expand access to information and services.

Page Load Isn't Special

It may seem that web performance comprises two disciplines:

- Optimising page load

- Optimising post-load interactions

The tools used by performance investigators in each discipline overlap to some degree, but the two generally feel like separate concerns. The metrics that we report against implicitly cleave these into different "camps", leaving us thinking about pre- and post-load as distinct universes.

But what if they aren't?

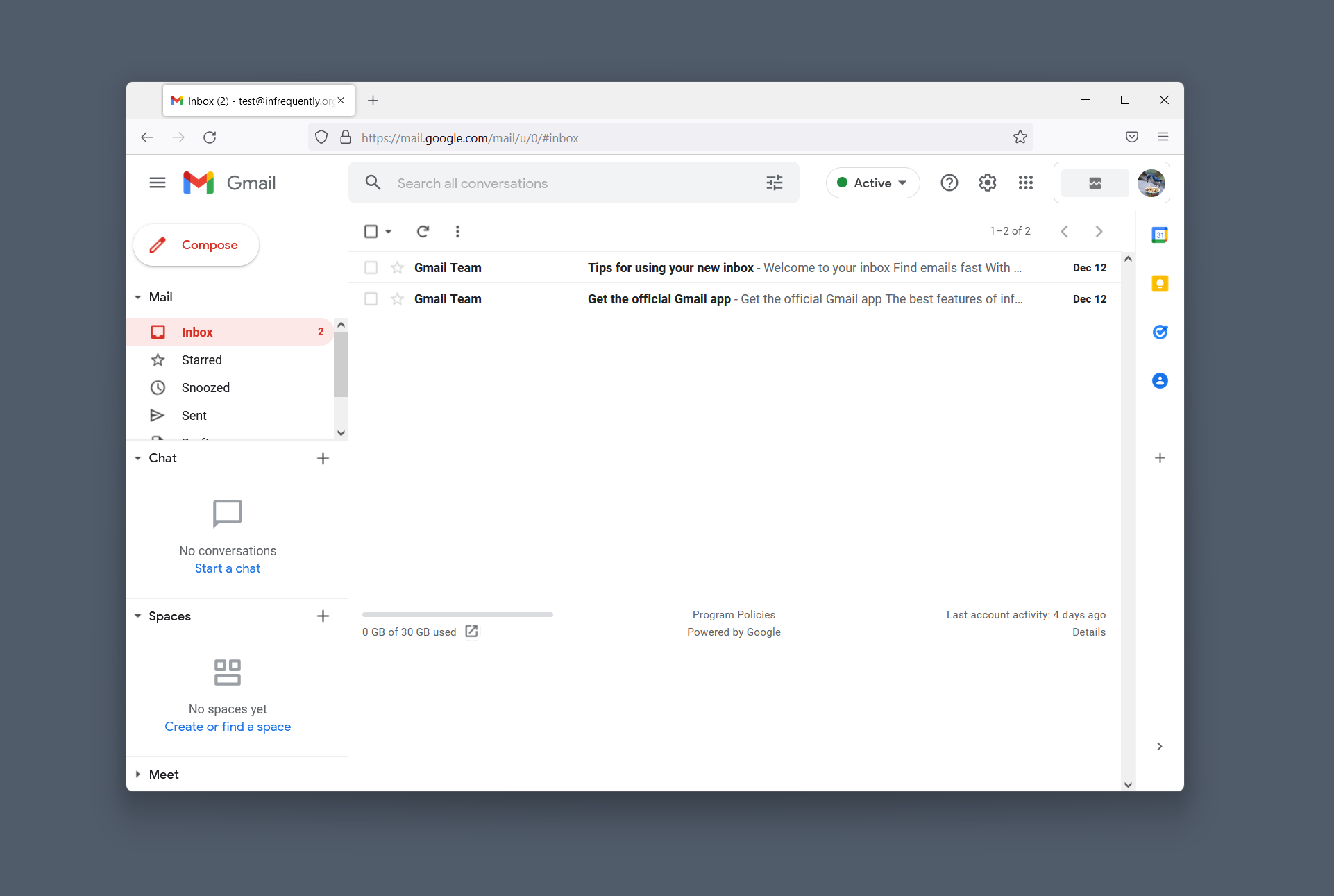

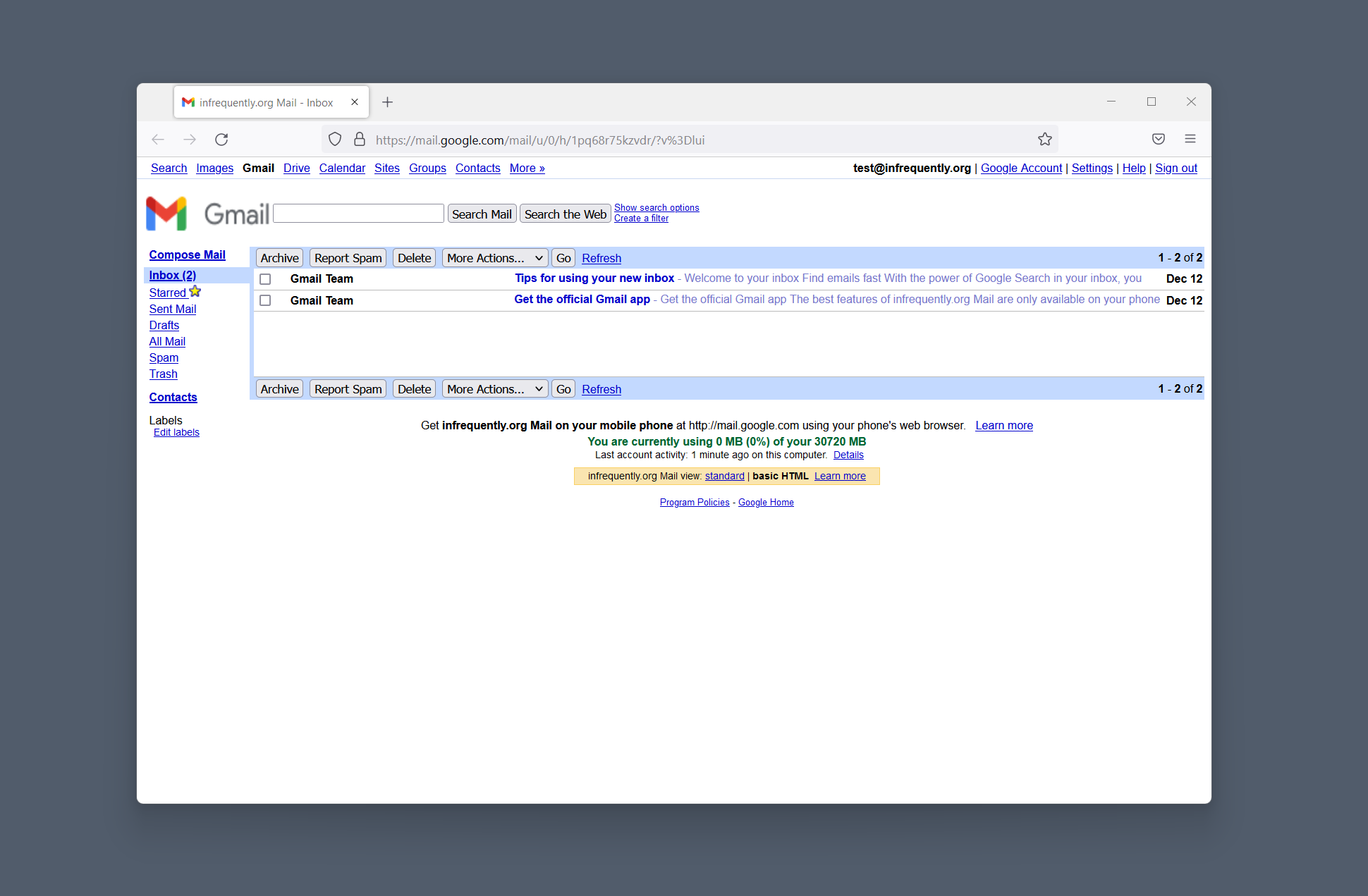

Consider the humble webmail client.

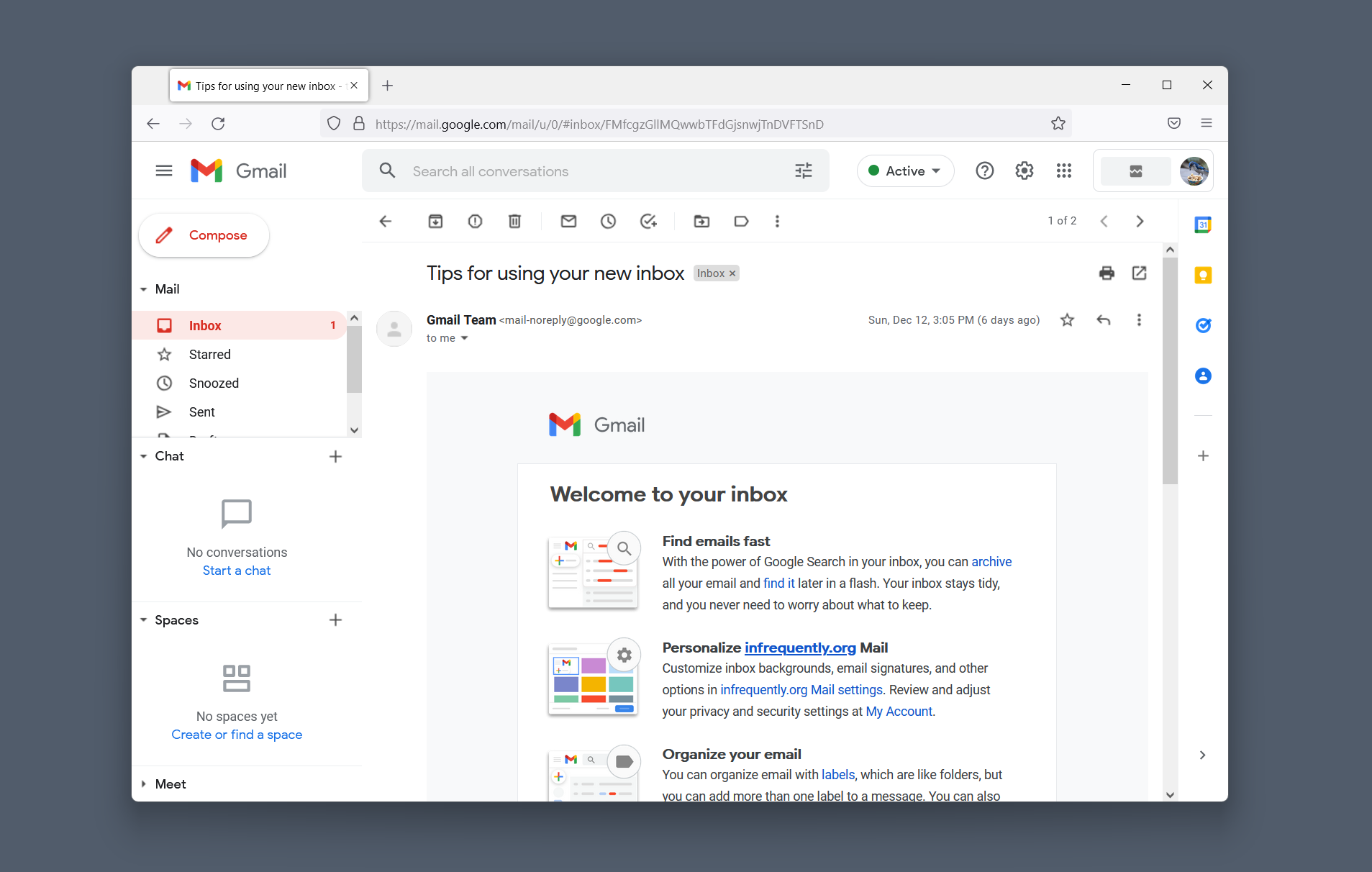

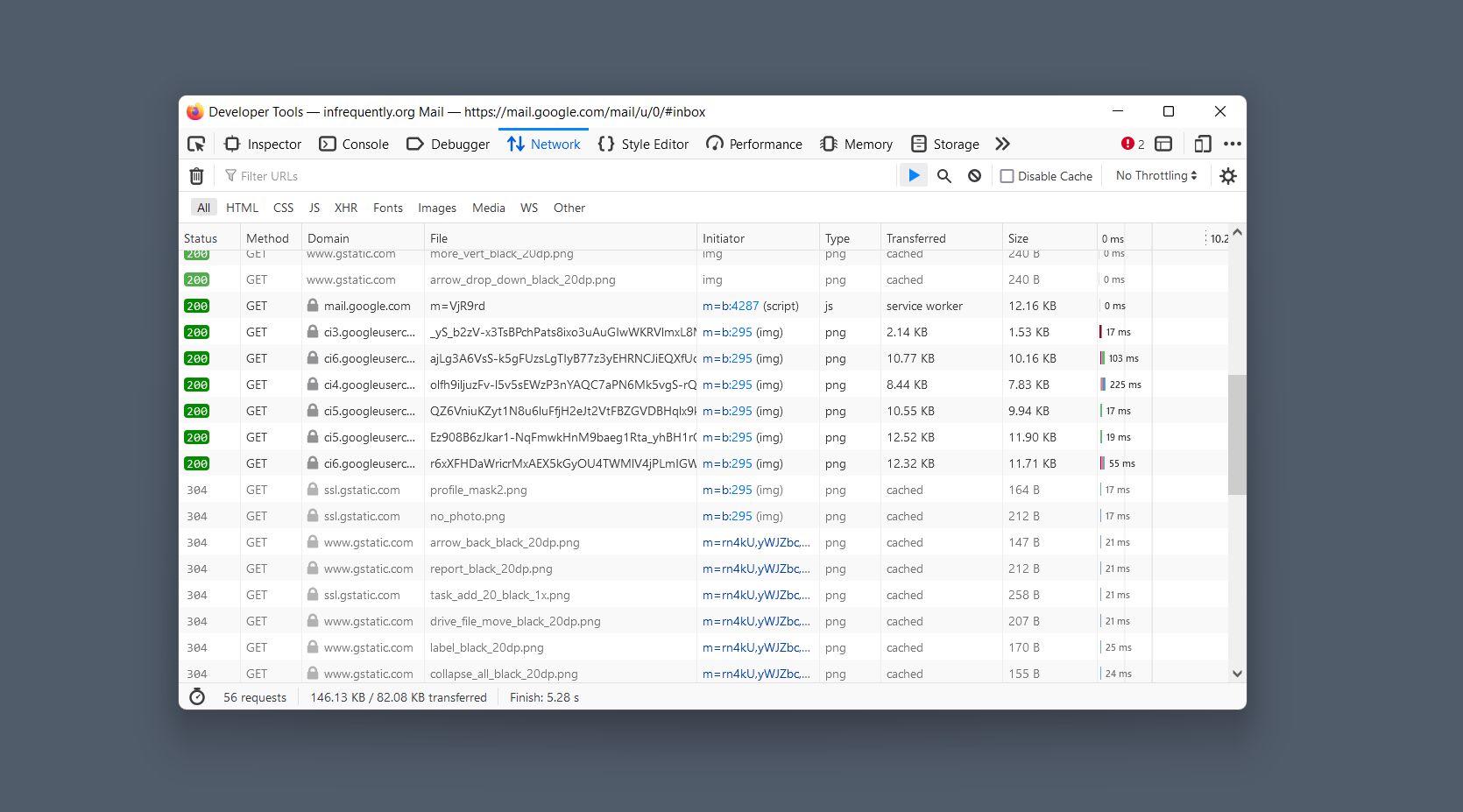

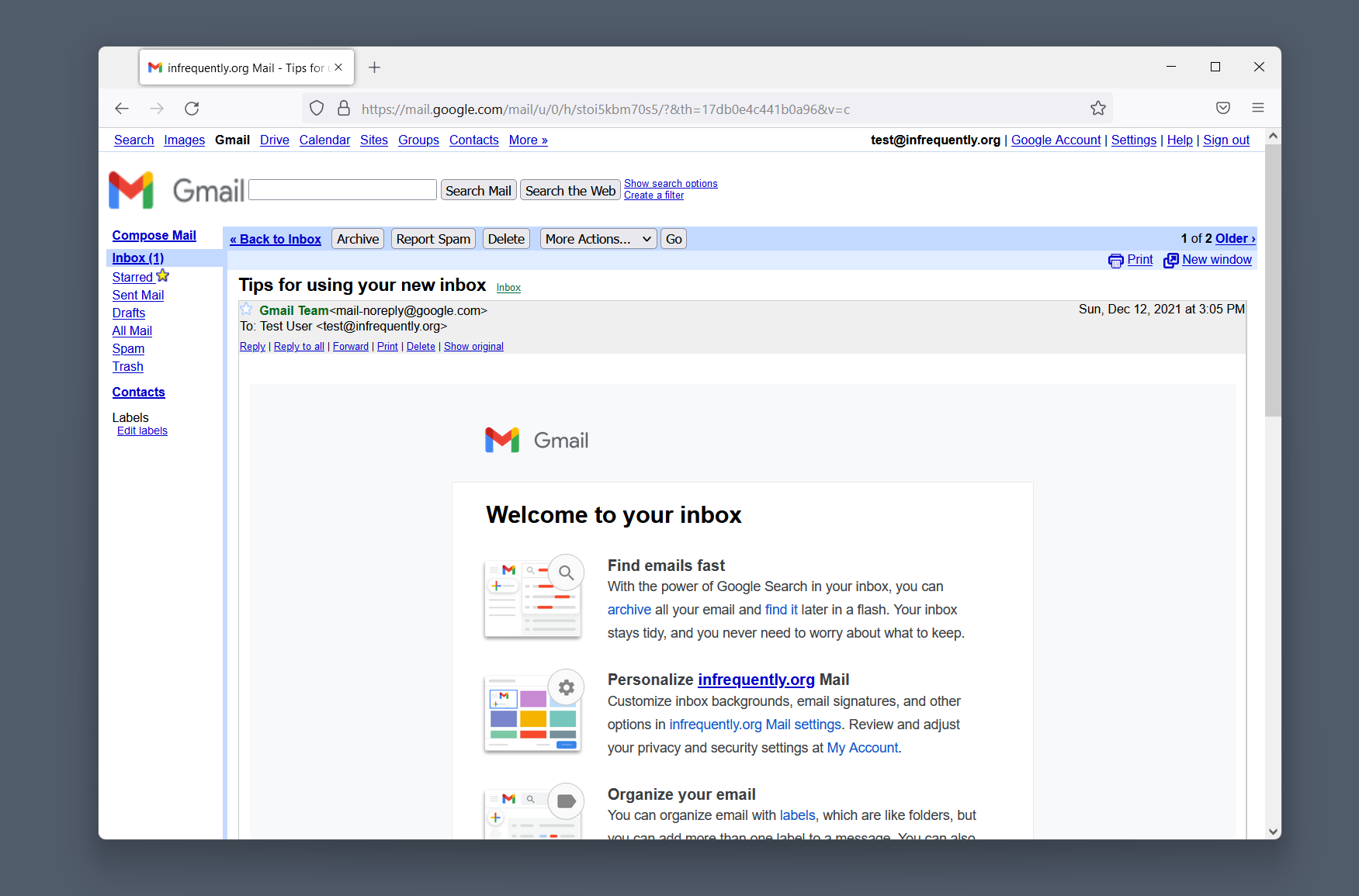

Here are two renderings of the same Gmail inbox in different architectural styles: one based on Ajax, and the other on "basic" HTML:

The difference in weight between the two architectures is interesting, but what we should focus on is the per-interaction loop. Typing gmail.com in the address bar, hitting Enter, and becoming ready to handle the next input is effectively the same interaction in both versions. One of these is better, and it isn't the experience of the "modern" style.

These steps inform a general description of the interaction loop:

1. The system is ready to receive input.

2. Input is received and processed.

3. Progress indicators are displayed.

4. Work starts; progress indicators update.

5. Work completes; output is displayed.

6. The system is ready to receive input.
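The loop above can be made concrete by timestamping each phase of a single interaction. What follows is a minimal TypeScript sketch, not anything taken from Gmail or from a browser API: the `LoopTimestamps` and `LoopBreakdown` types, the `summariseLoop` function, and the sample numbers are all hypothetical and chosen only for illustration.

```typescript
// Hypothetical names throughout; none of this comes from a real library.
type LoopPhase =
  | "inputReceived"
  | "progressShown"
  | "workStarted"
  | "outputDisplayed"
  | "readyAgain";

// Milliseconds measured from a shared origin, one entry per phase.
type LoopTimestamps = Record<LoopPhase, number>;

interface LoopBreakdown {
  timeToFeedbackMs: number; // input received -> progress indicator visible
  workDurationMs: number;   // work started -> output displayed
  totalLatencyMs: number;   // input received -> ready for the next input
}

// Reduce one trip around the loop to the durations a user would feel.
function summariseLoop(t: LoopTimestamps): LoopBreakdown {
  return {
    timeToFeedbackMs: t.progressShown - t.inputReceived,
    workDurationMs: t.outputDisplayed - t.workStarted,
    totalLatencyMs: t.readyAgain - t.inputReceived,
  };
}

// Made-up trace for illustration only; not a measurement of any real site.
const exampleTrace: LoopTimestamps = {
  inputReceived: 0,
  progressShown: 50,
  workStarted: 60,
  outputDisplayed: 900,
  readyAgain: 1000,
};

const exampleBreakdown = summariseLoop(exampleTrace);
```

The record has the same shape whether the trace came from a full navigation or an in-page update, which is the sense in which page load is just one more turn of the loop.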

Tradeoffs In Depth

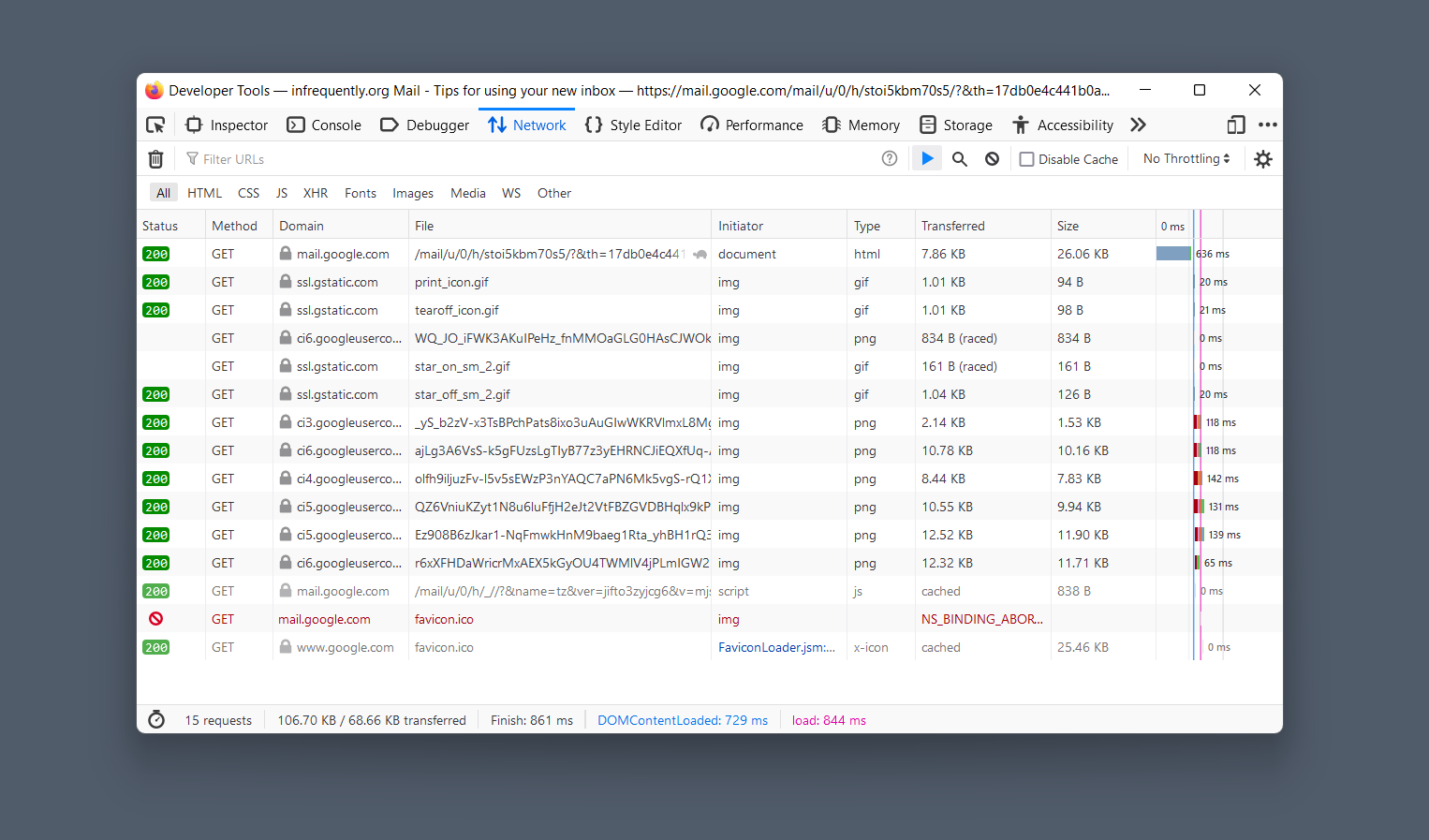

Consider the next step of our journey, opening the first message. The Ajax version leaves most of the UI in place, whereas the HTML version performs a full page reload. Regardless of architecture, Gmail needs to send an HTTP request to the server and update some HTML when the server replies. The chief effect of the architectural difference is to shift the distribution of latency within the loop.

Some folks frame performance as a competition between Team Local (steps 2 & 3) and Team Server (steps 1 & 4). Today's web architecture debates (e.g. SPA vs. MPA) embody this tension.

Team Local values heap state because updating a few kilobytes of state in memory can, in theory, involve less work to return to interactivity (step 5) while improving the experience of steps 2 and 3.

Intuitively, modifying a DOM subtree should generate less CPU load and need less network traffic than tearing down the entire contents of a document, asking the server to compose a new one, and then parsing/rendering it along with all of its subresources. Successive HTML documents tend to be highly repetitive, after all, with headers, footers, and shared elements continually re-created from source when navigating between pages.

But is this intuitive understanding correct? And what about the other benefits of avoiding full page refreshes, like the ability to animate smoothly between states?

Herein lies our collective anxiety about front-end architectures: traversing networks is always fraught, and so we want to avoid it being jarring. However, the costs to deliver client-side logic that can cushion the experience from the network latency remain stubbornly high. Improving latency for one scenario often degrades it for another. Despite partisan protests, there are no silver bullets; only complex tradeoffs that must be grounded in real-world contexts — in other words, engineering.

As a community, we aren't very good at naming or talking about the distributional effects of these impacts. Performance engineers have a fluency in histograms and percentiles that the broader engineering community could benefit from as a lens for thinking about the impacts of design choices.

Given the last decade of growth in JavaScript payloads, it's worth resetting our foundational understanding of these relative costs. Here, for instance, are the network costs of transitioning from the inbox view of Gmail to a message:

Objections to the comparison are legion.

First, not all interactions within an email client modify such a large portion of the document. Some UI actions may be lighter in the Ajax version, especially if they operate exclusively in the client-side state. Second, when a full-page refresh is avoided, steps 2, 3, and 4 in our interaction loop can be communicated with greater confidence and in a less jarring way. Lastly, by avoiding an entire back-and-forth with the server for all UI states, it's possible to add complex features, like chat and keyboard accelerators, in a way that doesn't incur loss of context or focus.

The deeper an app's session length and the larger the number of "fiddly" interactions a user may perform, the more attractive a large up-front bundle can be to hide future latency.

This insight gives rise to a second foundational goal for web performance:

We expand access by reducing latency and variance across all interactions in a user's session to more reliably return the system to an interactive state.

For sites with low interaction depths and short sessions, this implies that web performance engineering might remove as much JavaScript and client-side logic as possible. For other, richer apps, performance engineers might add precisely this sort of payload to reduce session-depth-amortised latency and variance. The tradeoff is contextual and informed by data and business goals.
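To see why session depth changes the answer, here is a toy cost model in TypeScript. It assumes each architecture has one fixed up-front cost and one fixed per-interaction cost, which real systems do not, and it ignores variance entirely. The `ArchitectureCost` type, both functions, and every number are illustrative assumptions rather than measurements.

```typescript
// Toy model: all names and numbers are hypothetical.
interface ArchitectureCost {
  upFrontMs: number;        // one-time cost paid at the start of the session
  perInteractionMs: number; // cost paid on every interaction afterwards
}

// Average latency per interaction once the up-front cost is spread
// across the whole session.
function amortisedLatencyMs(cost: ArchitectureCost, interactions: number): number {
  if (interactions < 1) {
    throw new RangeError("interactions must be at least 1");
  }
  return (cost.upFrontMs + cost.perInteractionMs * interactions) / interactions;
}

// Smallest session depth at which the heavier client's total cost is no
// worse than the lighter one's, or null if it never catches up.
function breakEvenInteractions(
  heavyClient: ArchitectureCost,
  lightClient: ArchitectureCost,
): number | null {
  const savedPerInteraction =
    lightClient.perInteractionMs - heavyClient.perInteractionMs;
  if (savedPerInteraction <= 0) {
    return null; // the heavy client never repays its up-front cost
  }
  const extraUpFront = heavyClient.upFrontMs - lightClient.upFrontMs;
  return Math.max(0, Math.ceil(extraUpFront / savedPerInteraction));
}

// Illustrative inputs only.
const heavyClient: ArchitectureCost = { upFrontMs: 4000, perInteractionMs: 200 };
const lightClient: ArchitectureCost = { upFrontMs: 1000, perInteractionMs: 800 };

const shallowHeavy = amortisedLatencyMs(heavyClient, 2);  // 2200
const shallowLight = amortisedLatencyMs(lightClient, 2);  // 1300
const deepHeavy = amortisedLatencyMs(heavyClient, 20);    // 400
const deepLight = amortisedLatencyMs(lightClient, 20);    // 850
const breakEven = breakEvenInteractions(heavyClient, lightClient); // 5
```

With these made-up inputs the lighter client wins a two-interaction session and the heavier one wins a twenty-interaction session, with the crossover at five. The useful output is less the specific number than the habit of asking how deep sessions actually are before choosing.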

No silver bullets, only engineering.

Medians Don't Matter

Not all improvements are equal. To understand impacts, we must learn to think in terms of distributions.

Our goal is to minimise latency and variance in the interactivity loop... but for whom? Going back to our first principle, we understand that performance is the predicate to access. This points us in the right direction. Performance engineers across the computing industry have learned the hard way that the sneaky, customer-impactful latency is waaaaay out in the tail of our distributions. Many teams have reported making performance better at the tail only to see their numbers get worse upon shipping improvements. Why? Fewer bouncing users. That is, more users who get far enough into the experience for the system to boot up in order to report that things are slow (previously, those users wouldn't even get that far).
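The "numbers get worse after an improvement" effect can be reproduced with a few lines of arithmetic. This TypeScript sketch assumes a simplified world in which any user whose latency exceeds a fixed threshold bounces before telemetry can report; the threshold, the function names, and the latency values are all invented for illustration.

```typescript
// Hypothetical model: users slower than `bounceAfterMs` leave before
// the page can report anything, so their samples never arrive.
function reportedLatencies(trueLatenciesMs: number[], bounceAfterMs: number): number[] {
  return trueLatenciesMs.filter((ms) => ms <= bounceAfterMs);
}

function mean(values: number[]): number {
  if (values.length === 0) {
    throw new RangeError("need at least one value");
  }
  return values.reduce((sum, v) => sum + v, 0) / values.length;
}

const bounceAfterMs = 10000;

// Invented latencies for six users, before and after a tail-focused fix
// that halves latency for the two slowest users and changes nothing else.
const trueBefore = [1000, 2000, 3000, 4000, 12000, 16000];
const trueAfter = [1000, 2000, 3000, 4000, 6000, 8000];

const seenBefore = reportedLatencies(trueBefore, bounceAfterMs); // 4 users report
const seenAfter = reportedLatencies(trueAfter, bounceAfterMs);   // 6 users report

const dashboardBefore = mean(seenBefore); // 2500
const dashboardAfter = mean(seenAfter);   // 4000
```

Every user in this example is as fast or faster after the fix, yet the reported average rises from 2500 ms to 4000 ms because two previously invisible users now show up in the data. Tracking how many users report at all, alongside the latency figure, is one way to keep this from being misread as a regression.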

Tail latency is paramount. Doing better for users at the median might not have a big impact on users one or two sigmas out, whereas improving latency and variance for users at the 75th percentile ("P75") and higher tends to make things better for everyone.

As web performance engineers, we work to improve the tail of the distribution (P75+) because that is how we make systems accessible, reliable, and equitable.
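For readers less used to percentiles, here is a small TypeScript sketch of how P50, P75, and P95 respond to different kinds of fixes. It uses the nearest-rank method, which is one of several common percentile definitions, and the sample values are invented; the `percentile` helper is written for this example and is not part of any library.

```typescript
// Nearest-rank percentile: sort ascending, then take the value at
// rank ceil(p / 100 * n), counting from 1.
function percentile(samples: number[], p: number): number {
  if (samples.length === 0) {
    throw new RangeError("need at least one sample");
  }
  if (p < 0 || p > 100) {
    throw new RangeError("p must be between 0 and 100");
  }
  const sorted = samples.slice().sort((a, b) => a - b);
  const rank = Math.max(1, Math.ceil((p / 100) * sorted.length));
  return sorted[rank - 1];
}

// Invented latency samples in milliseconds.
const baseline = [100, 120, 130, 150, 170, 200, 900, 2500];

// A fix that only speeds up users who were already fast.
const medianFix = [60, 70, 80, 90, 170, 200, 900, 2500];

// A fix that only speeds up the slowest users.
const tailFix = [100, 120, 130, 150, 170, 180, 400, 900];

const summary = [baseline, medianFix, tailFix].map((samples) => ({
  p50: percentile(samples, 50),
  p75: percentile(samples, 75),
  p95: percentile(samples, 95),
}));
// baseline:  p50 150, p75 200, p95 2500
// medianFix: p50 90,  p75 200, p95 2500
// tailFix:   p50 150, p75 180, p95 900
```

In this toy data the median-focused fix looks like a 40% win at P50 while leaving the slowest users exactly where they were, and the tail-focused fix is invisible at P50 but cuts P95 by more than half. Which number a team watches decides which of the two fixes gets shipped.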

A Unified Theory

And so we have the three parts of a unified mission, or theory, of web performance:

- The mission of web performance is to expand access to information and services.

- We expand access by reducing latency and variance across all interactions in a user's session to more reliably return the system to an interactive state.

- We work to improve the tail of the distribution (P75+) because that is how we make systems accessible, reliable, and equitable.

Perhaps a better writer can find a pithier way to encapsulate these values.

However they're formulated, these principles are the north star of my performance consulting. They explain tensions in architecture and performance tradeoffs. They also focus teams more productively on marginal users, which helps to direct investigations and remediation work. When we focus on getting back to interactive for the least enfranchised, the rest tends to work itself out.